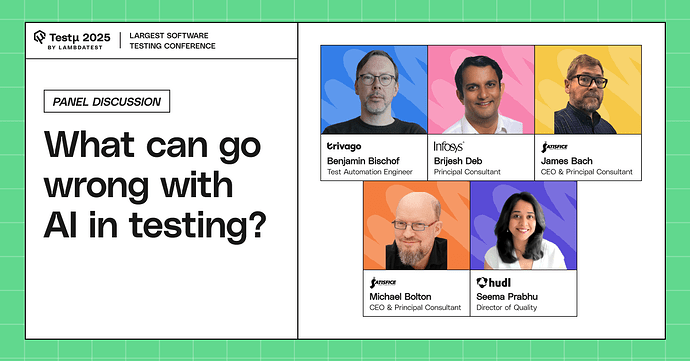

Join Benjamin Bischof, Brijesh Deb, James Bach, Michael Bolton, and Seema Prabhu as they explore the real challenges of using AI in software testing.

Discover the technical pitfalls, ethical risks, and consequences of over-relying on AI, including bias, blind spots, and testing AI systems themselves.

Learn practical insights and guardrails for using AI responsibly while maintaining quality and accountability in testing workflows.

Don’t miss out, book your free spot now

What’s the most common mistake teams make when they start using AI in testing?

How should organizations integrate AI into testing while safeguarding human expertise and sustaining high team performance?

Could you give a concrete example of how a biased AI model used in testing, perhaps for visual validation or defect triage, could lead to a product that harms or excludes a specific group of users, creating a new kind of ‘ethical bug’?

What strategies help ensure that leveraging AI in testing enhances, rather than replaces, human skills and critical thinking?

How can we "trust but verify" AI-generated test cases or defect reports?

From your various perspectives and experience, what are the worst-case scenarios that can result from AI testing gone wrong?

How can organizations avoid the pitfalls of over-reliance on AI in testing, preventing a decline in human expertise, critical thinking skills, and overall team performance?

When an AI tool autonomously gives a ‘go/no-go’ signal for a release, who is ultimately accountable for a missed critical bug? What is the most crucial ‘guardrail’ a team must implement before ceding this level of control?

What safeguards are necessary to prevent AI testing tools from perpetuating biases or making incorrect assumptions in critical systems?

How can agent safety be tested? Now that most test automation relies on agents, is there any standard for testing agent safety?

AI will test only what it has been fed, and it can go wrong if the information fed into its database is incorrect. But how can people judge this if they themselves are unaware of the problem and rely entirely on AI?

What is the optimal balance between human oversight and AI autonomy in critical testing processes, especially where AI decisions might have significant real-world consequences?

If an AI agent autonomously approves, rejects, or prioritizes features, who carries the accountability for that decision? Testers, developers, or leadership? How should testing practices adapt to surface ethical risks?

Could it be that AI is creating qualitative improvements that don’t show up in standard productivity statistics?

What are some best practices for building an agent that understands your coding style?

How can bias in training data affect AI-generated test cases or prioritization?

What’s one surprising way AI testing can fail at finding bugs?

How do we detect and correct skewed AI behavior in testing outputs?

As AI takes a larger role in testing, what are the most common pitfalls when human oversight is reduced, and how should teams balance autonomy with accountability?