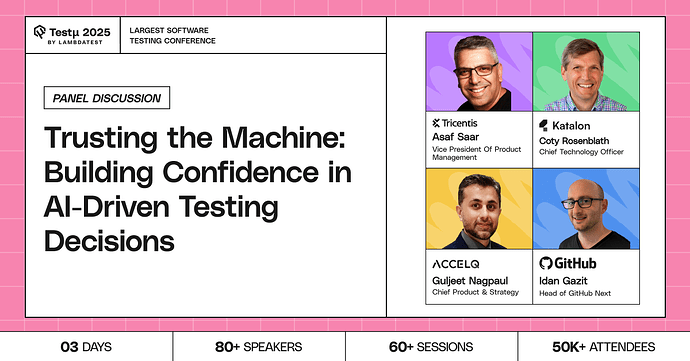

Join industry leaders Asaf Saar, Coty Rosenblath, Guljeet Nagpaul, and Idan Gazit as they unpack how to build trust in AI-driven testing decisions in an era where intelligent machines are reshaping quality engineering.

Discover strategies for validating AI outputs, improving explainability, managing risks, and fostering transparency when using AI for test prioritization, flakiness detection, autonomous test creation, and beyond.

Learn how human-in-the-loop approaches, frameworks, and guardrails can ensure AI becomes a trusted partner rather than a black box, bridging the gap between innovation and reliability in modern testing practices.

Don’t miss out. Book your free spot now.


How do you measure the reliability of AI-driven testing, and are there situations where human oversight is still essential?

What practices help teams trust AI testing results, especially when the AI suggests changes that contradict human intuition?

When a serious bug escapes detection by AI-driven QA and causes harm, is the liability on the toolmakers, the engineering team, or the organization as a whole—and how should companies prepare to handle such consequences?

How should responsibility be assigned when an AI-based testing solution fails, leading to costly defects in production, and what governance models can help manage the legal and ethical risks?

What is the single most important metric a team can use to measure the “trustworthiness” of their AI testing system, and how do you track it over time?

What is the most significant unsolved problem in AI-driven testing today that, if solved, would dramatically increase our ability to trust these systems?

How should organizations be investing in their people and skills to prepare for a future where the primary role of a QA professional is to manage, validate, and collaborate with intelligent machines?

Trust is often built on predictability. How do you build trust in AI systems that are designed to be non-deterministic and continuously learning, where their behavior might change from one day to the next?

If a critical software defect slips through AI-driven testing, ultimately causing significant harm or financial loss, where does accountability truly lie, and how should organizations navigate the legal and ethical ramifications?

How can AI be used to provide deeper insights into software quality, such as predicting potential user experience issues or identifying areas for proactive improvement?

How do you architect confidence metrics that aren’t just vanity scores but actually correlate to reduced production defects?

Many AI-driven tools are vendor-specific. How do you avoid vendor lock-in while still ensuring trust and long-term confidence?

How should AI-driven test decisioning be architected in a multi-cloud or hybrid environment, where data privacy and security laws vary across regions?

How can organizations balance automation with critical tester intuition and domain expertise?

If you had to give AI a “trust score” today for your QA process (0–100), what would it be and why?

Should AI test systems have “second opinion” modes, where results are validated by another AI or human?

If AI testing tools become black boxes, how do we ensure accountability when things go wrong?

Imagine your AI tester had a trust meter—what daily habits (logging, reasoning, accuracy checks) would make the meter go up or down?

As autonomous testing evolves, how will the role of human testers shift from directly executing tests to becoming “AI conductors” who oversee, interpret, and refine the AI’s decision-making process?
