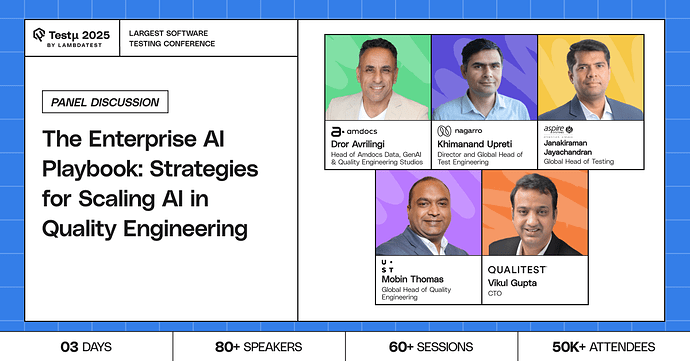

Join Dror Avrilingi, Janakiraman Jayachandran, Khimanand Upreti, Mobin Thomas, and Vikul Gupta as they share strategies for scaling AI in quality engineering across enterprises.

Discover how to move from isolated AI pilots to integrated platforms, design human-in-the-loop systems, and rewire your SDLC for predictive quality while maximizing business impact.

Don’t miss out: book your free spot now.

What’s the biggest challenge enterprises face when trying to scale AI in quality engineering?

What’s the most essential yet overlooked strategy for scaling AI that organizations often mishandle when moving past the pilot phase?

What is the one underappreciated move from your AI scaling approach that businesses tend to miss after proving out a pilot?

What QA mechanisms should be in place to ensure human-in-the-loop systems consistently balance automation with expert oversight?

What strategies help balance AI-driven automation with human expertise in testing?

How can enterprises ensure transparency and trust in AI-powered testing decisions?

How can AI improve test coverage and defect prediction in large-scale systems?

How do you prioritize which QA processes to automate with AI first?

How do enterprises continuously update and retrain AI models for evolving software needs?

What is the single most critical, non-obvious ‘play’ from your new playbook for scaling AI that most companies get wrong when they try to move beyond the successful pilot stage?

How does AI fundamentally shift the strategic role of quality engineering within an organization, from reactive bug detection to proactive quality assurance and continuous improvement?

Rewiring the SDLC for ‘predictive quality’ is a powerful idea. What is the most important data signal or metric that an organization needs to start capturing today to make that a reality in the future?

What are the best approaches for enterprises to integrate diverse AI tools and platforms (including those with proprietary formats and interfaces) with existing QA frameworks, legacy systems, and CI/CD pipelines?

If AI is doing the testing at scale, do we risk engineers spending more time testing the AI than the product?

Given the “pilot-purgatory problem,” where AI initiatives struggle to move beyond initial prototypes, which cultural and organizational strategies are most effective in driving appropriate AI adoption at the enterprise level?

When scaling AI models for quality engineering across the enterprise, how can organizations proactively address the challenges of data quality, data availability, and data governance?

What would scaling look like? Does it include different types of AI automation techniques?

How do you align AI strategies with existing quality engineering processes and organizational goals?

How do you integrate AI-powered testing tools into legacy QA workflows without disrupting existing processes?
