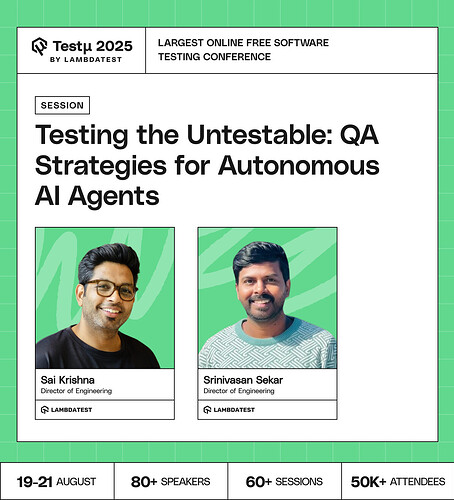

Join Sai Krishna and Srinivasan Sekar, Directors of Engineering at LambdaTest, as they share insights on testing autonomous AI agents and evolving QA practices.

Explore hybrid human-AI testing frameworks, probabilistic testing, and adversarial techniques to assess agent behavior and ensure safety, reliability, and ethical compliance.

Discover practical strategies, real-world examples, and methods to effectively test non-deterministic AI systems in production.

Don’t miss out, book your free spot now

How can teams test early without ending up with results that don’t reflect real-world reliability?

How do you practically define and measure ‘acceptable behavior’ for a non-deterministic agent, and how do you get an automated verdict in your pipeline without a simple pass or fail?

How should QA teams design test cases when AI agents interact with external APIs or real-world data sources?

What difficulties and advantages arise when defining ground truth and measuring accuracy, coherence, and consistency in multi-agent workflows?

What are the key hurdles and potential benefits in verifying accuracy, coherence, and consistency of results produced by multi-agent workflows?

How can we design effective test strategies for agent-to-agent interactions, given their autonomous and unpredictable behaviors?

If two AI agents test each other, who do you trust more, the agents or the humans?

What metrics should we use to measure success in agent-to-agent testing: accuracy, adaptability, or the ability to surprise us with edge cases?

Wouldn’t this create a never-ending loop, with one agent testing another agent that is itself testing an agent?

What is the most important, irreplaceable role for a human tester in this new ‘Agent-to-Agent’ testing model?

What are the unique challenges and opportunities in establishing ground truth and evaluating the accuracy, coherence, and consistency of outputs in multi-agent workflows?

When agents start testing each other, how do we avoid infinite loops of ‘I found a bug in your bug report’?

If two AI agents disagree on a test result, do we call it a defect… or just let them argue it out in a Slack channel?

Can AI agents design, execute, and analyze tests for other AI agents with minimal human intervention, and what are the implications for scalability and efficiency?

How can transparency and interpretability be maintained in the testing process, so that developers and stakeholders can understand the rationale behind agent decisions and test failures?

How can “untestable” be defined in the context of multi-agent systems, and what criteria are used to determine when agent-to-agent testing becomes the optimal or necessary approach?

What mechanisms are in place to ensure effective and secure communication among agents?
