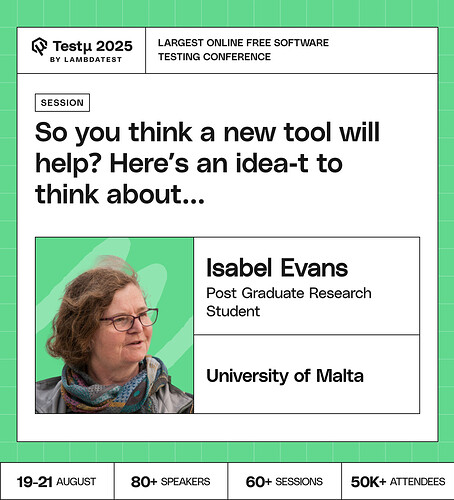

Join Isabel Evans, software quality and testing practitioner, as she shares insights from her research on how testers design, evaluate, and acquire tools.

Explore the idea-t framework, a set of heuristics backed by real-world evidence, to guide tool design and evaluation for testing teams.

Discover testers’ experiences, research findings, and practical methods to make tool decisions more effective and human-centered.

Don’t miss out, book your free spot now

How often do your teams reflect on whether existing tools are helping or slowing you down? What about re-evaluating them?

Specifically, does the idea-t framework address the challenges identified from the feedback gathered?

How do you decide which AI tool is the best fit for testing?

How do you decide if a new tool is really worth adding, instead of just sticking with what already works?

From your experience, what's one AI-testing initiative that exceeded expectations, and one that didn't? What should others learn from them?

What were the key findings or successes from these trials that demonstrate the practical value and effectiveness of the idea-t heuristics?

Given that the framework offers heuristics as gateways to discovery, how does it encourage proactive and preventative measures in tool design and acquisition?

Which option reduces overall complexity: adding a new tool or refining existing workflows?

With so many AI-powered tools emerging, how do we differentiate between tools that genuinely transform testing/engineering workflows versus those that just add noise and complexity to the stack?

How did you identify the most common pain points testers face when evaluating new tools?

Once a tool is identified as potentially slowing the team down, what is the typical process for re-evaluating it (e.g., a trial period with alternatives, a deep-dive analysis, or stakeholder input)?

How can smaller teams with limited resources still leverage the idea-t heuristics effectively?

Every new problem seems to bring a new tool, but here’s an idea to think about: are we underestimating the value of simplicity?

Have you used GenAI to build out tools based on heuristics?

Should we adopt a new tool, or evaluate the pros and cons based on our existing code structure?

How will an AI framework support automation in GitHub?

How do we operationalize AI-driven exploratory testing to catch variability-induced failures without slowing high-velocity releases?

How do you balance the efficiency of heuristics in AI against the risk of oversimplifying complex problems? It's generally a trade-off between time and output, so how do you mitigate the risk of oversimplification here?

What process do you follow to reassess a tool that may be slowing the team down: trial runs, performance analysis, or decision-making with stakeholders?