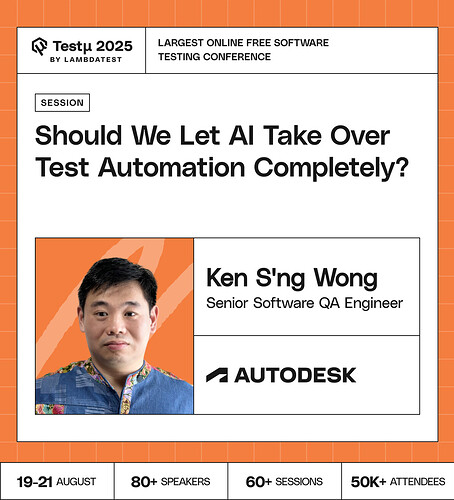

Join Ken Sng Wong as he examines whether AI can truly take over test automation completely.

Discover how AI accelerates automation with test case recommendations, pipeline scripting, and preliminary root cause analysis, while also exploring its limitations, such as hallucinations, context gaps, and incomplete coverage.

Learn why a balanced approach, one that leverages AI for speed and efficiency while relying on SDETs for validation and comprehensive coverage, is key to effective test automation.

Don’t miss out: book your free spot now.

What parts of test automation are best suited for full AI takeover, and where should human expertise always stay in control?

How can organizations balance AI-driven automation with human judgment to avoid over-reliance on machines?

How can organizations systematically test, verify, and secure AI-generated code before integrating it into their automation pipelines?

What frameworks or methodologies are recommended to minimize risks and guarantee robustness in LLM-generated automation scripts?

What are some of the less obvious but impactful areas where AI could augment human testers’ capabilities, allowing them to focus on more complex aspects of software quality?

Can you describe what the ideal day-to-day workflow looks like for an SDET who is effectively leveraging AI as a partner rather than just a code generator?

What areas of test automation are best suited for full AI ownership, and which still require human oversight?

How do we prepare teams and infrastructure for a gradual shift towards AI-centric test automation?

How can QA detect cases where the RAG pipeline provides inconsistent answers to semantically similar queries?

Since LLMs can generate incorrect code, which processes or frameworks best ensure the quality and security of AI-generated automation scripts?

If AI becomes proficient at generating and verifying tests for documented specifications, will the primary role of human testers shift entirely to exploratory and “out-of-the-box” testing?

To what extent should AI be entrusted with full control over test automation in critical systems?

What percentage of your company’s testing uses AI, and how much autonomy does the AI have?

What kind of workflows are best suited for prompt-based AI testing, and what criteria determine when manual implementation or alternative AI approaches would be more efficient?

How can SDETs effectively use these AI insights to streamline the debugging process and provide actionable feedback to developers, reducing the overall time-to-fix?

How do we prevent AI tools from generating redundant or low-value tests that inflate execution time?

How do we ensure AI-driven test automation doesn’t overfit to past defects and miss new, unseen issues?

What are the key ethical considerations and potential biases that AI models might introduce into the testing process, and what steps should development teams take to mitigate these risks and ensure fair and inclusive testing practices?

Can AI-driven automation replace exploratory testing, or is human intuition irreplaceable?
