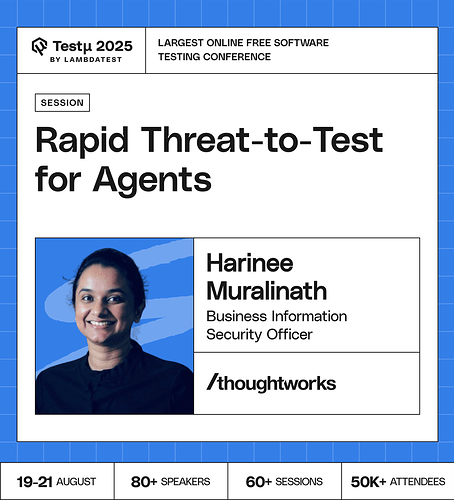

Join us for this insightful session with Harinee Muralinath, Business Information Security Officer (BISO) at Thoughtworks, as she explores how QA teams can adapt their approach when testing LLM-powered agents.

As systems ranging from copilots to customer service bots gain autonomy, the testing lens must expand beyond “does the output look right?” to identifying deeper risks.

Harinee will walk you through agent architecture, highlight shifting trust boundaries, and introduce a lightweight method for integrating security thinking into QA workflows.

You’ll learn how the emerging Agentic-AI threat taxonomy helps testers uncover vulnerabilities and turn them into meaningful test ideas.

Key Takeaways:

Understand where risks emerge in LLM-based agents

Apply a fast, practical approach to threat modelling

Translate threat insights into stronger test coverage

Don’t miss this session: equip yourself with the mindset and tools to test AI-driven systems with confidence. Register now!

How can teams quickly test AI agents?

Most AI-driven testing depends on training data or past executions. How do we ensure these models don’t ‘learn’ bad practices from flaky or poorly designed tests?

Many QA teams are small, strapped for time, and already overloaded. How do we integrate threat-based testing into regular workflows without it becoming another “checklist ritual” that nobody follows in practice?

How do you measure test coverage when threats are dynamic and continuously evolving?

How can teams quickly test AI agents against real-world threats without slowing down development?

How can we design rapid threat-to-test frameworks that continuously evolve with new attack vectors, ensuring AI agents remain resilient in real-world enterprise environments?

Do you think AI will ever be able to truly reason like humans, or will it always be advanced pattern matching?

How can AI help us with exploratory testing, with testing risky systems, or with testing AI systems themselves?

How does the “Rapid Threat-to-Test” approach specifically integrate security considerations and testing practices throughout the entire lifecycle of an AI agent?

How does the approach balance rapid testing and deployment with thorough security assessments, particularly in scenarios involving real-time decision-making by AI agents?

What are the key limitations of security testing for LLMs?

How can AI agents be used to test an enterprise’s AI models, and do they produce biased results?

How is the rapid threat-to-test approach better at identifying and evaluating potential zero-day vulnerabilities in a given system or application?

Shift-left security is usually implemented in the automation pipeline, but when we integrate AI agents, how can we protect and secure the agents themselves?

How should we evaluate an LLM?

What are the key areas of security testing for AI agents?

Are there AI-driven solutions for checking security risks in AI agents?

How can teams quickly test AI agents against real-world threats?

How do you prioritize threats without full security expertise?