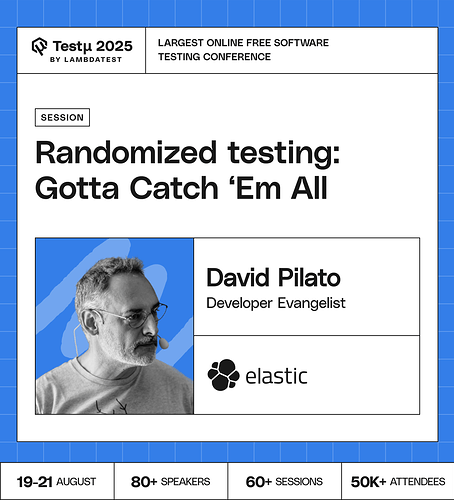

Join David Pilato as he reveals how randomized testing can uncover hidden bugs that deterministic tests often miss.

Discover how introducing randomness into unit and integration tests exposes unexpected edge cases like integer overflows or locale-specific issues that traditional approaches overlook.
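As an illustration of the integer-overflow case, here is a minimal sketch using only the JDK's `java.util.Random`, not the RandomizedTesting framework itself. It assumes a hypothetical `bucketFor` helper that maps a hash code to a bucket with `Math.abs(hash) % buckets`. Because `Math.abs(Integer.MIN_VALUE)` overflows and stays negative, the helper can return a negative index. The hand-picked deterministic checks pass and miss the bug. The randomized check draws inputs from a seeded generator and prints the seed, so a failing run can be replayed. Mixing boundary values such as `Integer.MIN_VALUE` into the random stream about 10% of the time is an assumption of this sketch. A uniform `nextInt()` alone would almost never hit that value.

```java
import java.util.Random;

public class RandomizedAbsDemo {

    // Hypothetical function under test: maps a hash code to a bucket index.
    // Bug: Math.abs(Integer.MIN_VALUE) overflows and stays negative.
    static int bucketFor(int hash, int buckets) {
        return Math.abs(hash) % buckets;
    }

    // Boundary values mixed into the random stream (an assumption of this sketch).
    private static final int[] EDGE_VALUES = {
        Integer.MIN_VALUE, Integer.MIN_VALUE + 1, -1, 0, 1, Integer.MAX_VALUE
    };

    // Returns a random int, picking one of the edge values about 10% of the time.
    static int randomIntWithEdges(Random random) {
        if (random.nextInt(10) == 0) {
            return EDGE_VALUES[random.nextInt(EDGE_VALUES.length)];
        }
        return random.nextInt();
    }

    // Checks the property "0 <= bucket < buckets" for one seed.
    // Returns the first failing input, or null if every iteration passed.
    static Integer findCounterExample(long seed, int iterations, int buckets) {
        Random random = new Random(seed); // same seed => same input sequence
        for (int i = 0; i < iterations; i++) {
            int hash = randomIntWithEdges(random);
            int bucket = bucketFor(hash, buckets);
            if (bucket < 0 || bucket >= buckets) {
                return hash;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        // Deterministic checks with hand-picked values: they pass, so the bug stays hidden.
        for (int hash : new int[] {0, 1, 42, -42, Integer.MAX_VALUE}) {
            int bucket = bucketFor(hash, 10);
            if (bucket < 0 || bucket >= 10) {
                throw new AssertionError("deterministic check failed for " + hash);
            }
        }
        System.out.println("Deterministic checks passed");

        // Randomized check: reuse a seed passed as an argument, or pick a fresh one.
        long seed = args.length > 0 ? Long.parseLong(args[0]) : System.nanoTime();
        Integer failing = findCounterExample(seed, 1_000, 10);
        if (failing != null) {
            System.out.println("Seed " + seed + " found bad input " + failing
                + " -> bucket " + bucketFor(failing, 10));
        } else {
            System.out.println("Seed " + seed + ": no failure in this run");
        }
    }
}
```

Passing the printed seed back as the first program argument replays exactly the same sequence of inputs. This is the same reproducibility idea that seed-based test frameworks rely on.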

Learn how Elasticsearch leverages the RandomizedTesting framework and TestContainers to build stronger, more resilient codebases. After this session, you’ll see the random() function as one of your best allies in testing!

Don’t miss out, book your free spot now

How do you decide the right level of randomness in tests without making them too unpredictable or hard to reproduce?

Can randomized testing catch types of bugs that traditional testing often misses, and do you have an example from real projects?

How does the RandomizedTesting framework used by Elasticsearch differ from conventional test frameworks in Java?

How do you handle reproducibility with randomized tests? When a test fails with a random value, what’s the best practice to ensure you can reliably reproduce that exact failure for debugging?

In a tight release crunch, which test category is the least risky to scale back: unit, integration, UI, or performance?

When forced to choose between test coverage and delivery speed, which testing layer would you sacrifice first to hit the deadline?

How do you convince a team that a test suite that “regularly fails” is a good thing? Many organizations have a culture where a failing build is seen as a major problem, not a sign of a robust testing strategy.

What is the right balance between deterministic tests that cover known business logic and randomized tests that explore the unknown? Is there a recommended ratio or a way to decide which is more appropriate for a given feature?

The Math.abs() example is a perfect, self-contained edge case. In more complex, stateful applications, how do you prevent randomized tests from generating so much noise that it becomes difficult to identify the root cause of a failure?

What strategies and tools should we use to log crucial information (such as random seeds, input data, and system responses) so that failures are easy to debug and reliably reproducible?

How can the overall impact of randomized testing on our software quality and development velocity be measured?

What types of systems benefit the most from randomized testing?

How can testers build confidence in randomized testing when results may vary from run to run? What’s the best way to ensure reliability and trust?

Should automated health checkups be treated like preventive medicine, mandatory and frequent, or run only when failures appear?

Is there a practical way to combine exploratory testing by humans with AI-based randomness for maximum benefit?

What do you do when your randomized tests become flaky monsters—catch them or release them?

Can randomized testing uncover hidden edge cases that traditional testing always misses?

If you could only use one testing strategy for the rest of your career—would you pick randomized or deterministic testing? Why?

What’s the best way to avoid false confidence when using randomized tests?
