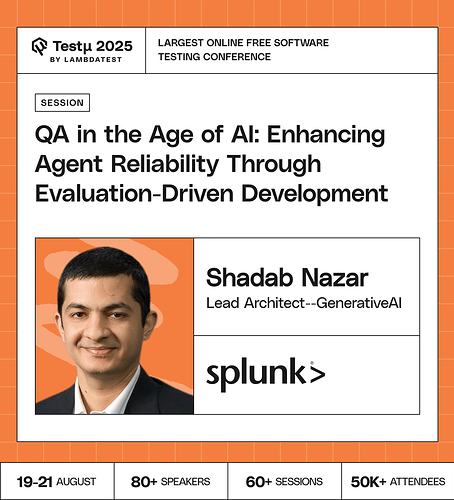

Join Shadab Nazar, Lead Generative AI Architect at Splunk Observability, as he shares a framework for evaluation-driven development to ensure AI agents are reliable, transparent, and trustworthy.

Discover how QA professionals can adapt proven practices, like scenario coverage, automation, and evaluation metrics, to the unique challenges of AI agents that are probabilistic and continuously evolving.

Gain insights on real-world tools, techniques, and collaboration models that embed QA into the AI lifecycle, helping teams build stronger, more reliable agent-based systems.

Don’t miss out, book your free spot now

What’s the most important thing testers should focus on to ensure quality in an AI-driven world?

How can we determine whether the evaluation metrics and test datasets are representative enough of real-world usage scenarios?

When AI behavior is non-deterministic, what criteria help decide if a failed test indicates a real issue or simply a permissible random outcome?

What approach can be taken to build an evaluation framework for an AI agent or LLM that measures not only its expected capabilities but also its weaknesses, like hallucinations or bias?

How would you integrate automated evaluations into an MLOps pipeline to ensure that every new model version meets a defined quality threshold before deployment?

Will tomorrow’s test engineers need to understand prompt engineering more than test case design?

Could you give an example of a classic QA technique, like boundary value analysis, and explain how you would adapt it to test a modern, probabilistic AI agent?

When a test for a probabilistic AI agent fails, how do you distinguish between a genuine bug and an acceptable, but undesirable, random output? What does a ‘bug report’ look like in that scenario?

Building diverse and meaningful test datasets is a huge challenge. What is your recommended strategy for generating data for edge cases and potential biases without accidentally encoding those same biases into your evaluation set?

How can we quantify the “irreplaceable” value of human empathy and intuition in a testing landscape increasingly dominated by algorithmic decisions and predictive analytics? How do we keep that line unblurred?

How can we simulate real-world unpredictability in evaluation so that agents don’t fail when exposed to edge cases in production?

What practices can ensure evaluation remains meaningful when agents interact with other agents, not just humans?

How can we track agent reliability as models evolve—without creating bottlenecks in delivery speed?

If performance issues are predicted before code is deployed, will performance engineers shift toward proactive design consulting?

Given the non-deterministic nature of some AI/ML models, how can QA teams design and implement effective regression testing strategies to ensure consistent and reliable performance when models are retrained or fine-tuned with new data?

How does evaluation-driven development reshape the role of QA in building reliable AI agents?

As the line blurs between human and AI capabilities in testing, what are the key technical competencies that QA professionals need to develop to adapt to this evolving landscape?

How can robust and continuous evaluation frameworks, with feedback loops and quality assurance methodologies, ensure and iteratively improve the trustworthiness, reliability, and safety of AI agents beyond simple performance metrics?

What are the risks of overfitting evaluation metrics—teaching agents to “pass the test” rather than behave reliably in real scenarios?
