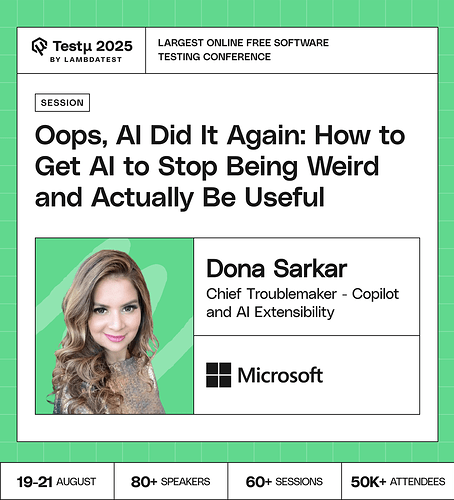

Join Dona Sarkar as she breaks down why AI systems often act weird, from hallucinations to nonsensical Copilot suggestions, and what teams can do to make them truly useful.

Discover real-world strategies for piloting AI responsibly, governing deployments, and instrumenting systems to track accuracy, bias, and agent behavior.

Learn a practical framework to test, supervise, and measure AI before moving from prototype to production, turning AI’s quirks into business value.

Don’t miss out, book your free spot now

What common mistakes do teams make when adopting AI that lead to unreliable or ‘weird’ outputs?

What practical approaches can ensure AI delivers consistent, valuable results in real-world testing?

When an AI system starts producing unexpected or incorrect outputs, what is the most critical initial step in diagnosing the problem?

What is the key first move in investigating the root cause when an AI model starts acting outside of expected boundaries?

Would we trust AI more if it admitted “I don’t know” instead of making stuff up?

When an AI agent “goes off the rails,” what is the most important first step a technical team should take to diagnose the root cause of the “weirdness”?

Looking ahead, what is the one “weird” AI behavior you believe will be the hardest to solve in the next few years?

What concrete metrics or KPIs can teams use to measure whether an AI system is moving from “weird” to “useful” in real-world deployments?

What regulations should a company keep in mind when leveraging AI at scale?

Which ones do you follow?

Where should orgs/teams draw the line between a quirky but ultimately helpful AI and one that is fundamentally unreliable and needs significant rework or even decommissioning?

What are the key differences between testing methodologies suitable for AI prototypes vs. those required for capable, production-ready AI systems?

How can organizations strike the right balance between AI autonomy and human oversight in testing?

What’s the best way to track and debug “weird” but high-value AI outputs without discouraging innovation?

How can teams effectively communicate the tangible business risks associated with “weird” AI behavior and advocate for adequate testing and governance without appearing to hinder innovation?

What common organizational or cultural barriers hold back the effective implementation of testing, governance, and measurement strategies for AI systems, and how can they be overcome?

What are some of the most critical, and perhaps overlooked, aspects of measuring AI systems that are essential for assessing their real-world usefulness and avoiding unexpected negative consequences in production?

Do you think AI’s “weirdness” is just a temporary stage, or will it always be part of using generative models?

How can we validate that our tests are effectively verifying system behavior?