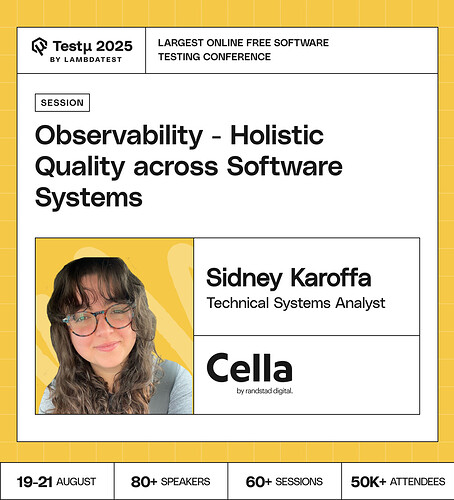

Join Sidney Karoffa as they explore how observability drives holistic quality across software systems.

Discover the fundamentals of observability (logs, traces, and metrics) and learn how these telemetry types strengthen the software development lifecycle.

Gain practical insights into integrating observability into your organization’s quality systems and building stronger collaboration between developers and testers to ensure resilient, high-quality software.

Don’t miss out: book your free spot now.


How can observability practices help QA teams detect subtle issues that traditional testing might miss?

How does observability help catch bugs traditional testing misses?

How does observability enable a more comprehensive understanding of system behavior compared to traditional monitoring practices?

Which metrics are most important for monitoring system quality?

What advantages does observability bring over standard monitoring in achieving end-to-end visibility of system quality?

For a QA team that has no experience with observability, what’s the single most impactful first step we can take tomorrow to start incorporating this into our testing process?

How do you recommend introducing observability into an organization where the culture traditionally views quality as a pre-production gate rather than a continuous, post-production activity?

Observability data can easily become overwhelming. How do we pick the right metrics to trust, and what are the key aspects of that choice?

You covered logs, traces, and metrics. If we only have the resources to master one of these telemetry types at first, which one typically provides the most value for testers just starting out?

Could you give a specific example of how a tester would use observability data from a production environment to write a better, more effective test case for a feature that is still in development?

How do you bake observability into an existing agile workflow without it becoming just another ceremony or source of friction for the development team?

What’s the best way to approach developers about collaborating with observability data, especially if they might be sensitive to testers “looking over their shoulder” at production logs and metrics?

Does this shift in responsibility blur the lines between a QA Engineer and a Site Reliability Engineer (SRE)? Where do you draw the line between using observability for quality vs. for operational stability?

How much coding or scripting knowledge is realistically required for a tester to become proficient with modern observability tools?

How does observability go beyond traditional monitoring to provide a holistic view of system quality?

How do you balance functional correctness with non-functional aspects like security, performance, and usability?

How do we measure “quality” through observability beyond uptime and error rates?

How can organizations effectively measure the ROI of observability investments when benefits like reduced downtime and improved customer satisfaction are difficult to quantify and attribute directly to specific initiatives?

How can observability evolve to move beyond troubleshooting and into a more proactive and predictive role, allowing teams to anticipate and mitigate issues before they impact users or business outcomes?
