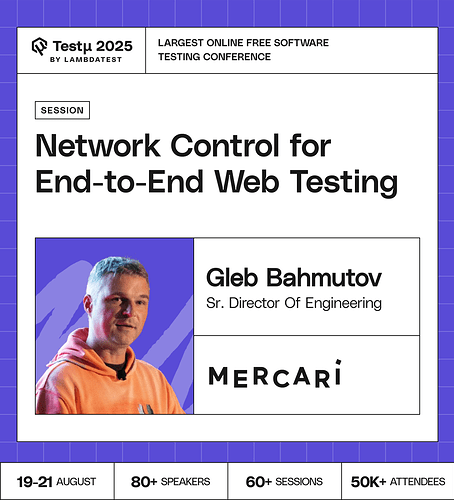

Join Gleb Bahmutov, Senior Director of Engineering at Mercari US, as he shares practical tips for controlling network calls in end-to-end web testing. Using Cypress and the “record–replay–inspect” pattern, he demonstrates how to make tests reliable, realistic, and easy to debug.

Discover how recording real API calls can improve test accuracy and help you catch issues faster.

Don’t miss out, book your free spot now

How can the “record - replay - inspect” pattern for API mocking help reduce flakiness in E2E tests while still ensuring realistic coverage of critical user flows?

How is Mercari utilizing AI in testing?

In end-to-end web testing, what specific network-level controls (such as bandwidth throttling, latency injection, and packet loss simulation) are key to replicating real-world scenarios?

In E2E testing, what are the most effective ways to manage test data that requires highly specific network conditions or behaviour?

How can network control be effectively integrated into CI/CD pipelines and automated testing frameworks to streamline E2E testing workflows, enable faster feedback loops, and improve overall development?

What’s the biggest advantage of using network control in web testing compared to traditional methods?

How can AI help distinguish between a true product bug and a flaky test caused by network instability during automation runs?

What tools and techniques are most effective for controlling network conditions like latency, bandwidth, and packet loss during E2E testing?

What strategies do you use to debug failures that occur only when using replayed network calls versus live API calls?

How can advanced network control in end-to-end web testing be leveraged to simulate real-world conditions like latency, bandwidth throttling, or packet loss—while integrating AI-driven insights to predict performance bottlenecks and optimize user exp

Would it detect when a test has changed since the previous run and, in that case, run the real queries to re-cache?

Think of the “record → replay → inspect” pattern like having a safety net for your API calls during end-to-end tests. First, you record real API responses, so you’re working with realistic data. Then, you replay those responses every time you run your tests, which keeps things consistent and prevents random failures caused by network hiccups or a backend acting up. Finally, you inspect the responses to make sure all the important user flows are still working as expected. Basically, it gives you the best of both worlds: stable tests without losing touch with real-world scenarios.
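To make the three steps concrete, here is a minimal sketch of the pattern in plain TypeScript. It is not the Cypress API or any plugin from the talk: `RecordReplay`, `Backend`, and `RecordedCall` are hypothetical names made up for illustration, and the real backend is assumed to be any async function you pass in.

```typescript
// Hypothetical names throughout; this is an illustration, not a Cypress API.
type Mode = "record" | "replay";

interface RecordedCall {
  method: string;
  url: string;
  status: number;
  body: unknown;
}

// Anything that performs the real call, e.g. a thin wrapper around fetch.
type Backend = (method: string, url: string) => Promise<{ status: number; body: unknown }>;

class RecordReplay {
  private calls = new Map<string, RecordedCall>();

  constructor(private readonly mode: Mode, private readonly backend?: Backend) {}

  // Record: call the real backend and store the response.
  // Replay: serve the stored response without touching the network.
  async request(method: string, url: string): Promise<RecordedCall> {
    const key = `${method.toUpperCase()} ${url}`;
    if (this.mode === "replay") {
      const hit = this.calls.get(key);
      if (!hit) throw new Error(`No recorded response for ${key}`);
      return hit;
    }
    if (!this.backend) throw new Error("Record mode needs a backend");
    const { status, body } = await this.backend(method, url);
    const call: RecordedCall = { method: method.toUpperCase(), url, status, body };
    this.calls.set(key, call);
    return call;
  }

  // Inspect: expose everything that was recorded so a test can assert on it.
  inspect(): RecordedCall[] {
    return Array.from(this.calls.values());
  }

  // Serialise the recording so it can be stored between runs.
  save(): string {
    return JSON.stringify(this.inspect());
  }

  // Restore a saved recording in replay mode; no backend is needed.
  static load(json: string): RecordReplay {
    const replay = new RecordReplay("replay");
    for (const call of JSON.parse(json) as RecordedCall[]) {
      replay.calls.set(`${call.method} ${call.url}`, call);
    }
    return replay;
  }
}
```

A first run would use `new RecordReplay("record", backend)` and keep the output of `save()`. Later runs would call `RecordReplay.load(saved)`, so the same responses come back every time, and a request that was never recorded fails loudly instead of quietly hitting the network.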

At Mercari, AI isn’t just a buzzword; it’s actually making testing smarter and more efficient. They use it to automatically generate test cases, spot flaky tests that fail at random, and work out which scenarios are the riskiest so they can focus on what matters most. In short, AI helps the team predict where things might break, which saves manual effort and makes their testing process faster and more reliable.

When it comes to end-to-end web testing, a few network-level tricks can make a huge difference. Things like bandwidth throttling, latency injection, and simulating packet loss are super important. Why? Because they let you mimic real-world conditions, like a user on a slow mobile network or a spotty Wi-Fi connection. Testing under these scenarios helps you catch performance hiccups and reliability issues that would otherwise fly under the radar in a typical, “perfect” test environment.

Ah, when it comes to end-to-end testing and handling test data that depends on specific network conditions, it’s really about mimicking the real-world network quirks your users might face. A few practical approaches I’ve found super useful are:

- Bandwidth throttling – basically, slowing down the network to see how your app behaves when the internet is sluggish or congested.
- Latency injection – adding delays to responses to check if your app can handle waiting for data without breaking.
- Packet loss simulation – dropping some packets on purpose to make sure your system can handle lost or corrupted data gracefully.
- Jitter simulation – introducing variable delays so you can test how stable your app is under inconsistent network conditions.

Think of it like stress-testing your app’s “network fitness.” If it can survive these scenarios, it’s much more reliable for real users.
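The four conditions above can be modelled in a few lines. This is only a sketch under stated assumptions: `NetworkProfile`, `planResponse`, and `seededRandom` are hypothetical names, and the function only decides what should happen to a response (drop it, or delay it by some number of milliseconds). Applying that decision is left to whatever stubbing or proxy tool you use. A seeded random generator keeps the "random" loss and jitter repeatable between runs.

```typescript
// Hypothetical profile shape; the field names are illustrative only.
interface NetworkProfile {
  bandwidthKbps: number; // throttled bandwidth, kilobits per second
  latencyMs: number;     // fixed delay added to every response
  jitterMs: number;      // maximum extra random delay, in [0, jitterMs)
  lossRate: number;      // probability in [0, 1] that a response is dropped
}

type Outcome = { dropped: true } | { dropped: false; delayMs: number };

// Small deterministic PRNG (mulberry32-style) so simulated runs are repeatable.
function seededRandom(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Decide what happens to one response of `payloadBytes` under `profile`.
function planResponse(
  profile: NetworkProfile,
  payloadBytes: number,
  random: () => number
): Outcome {
  // Packet loss: drop the response outright with probability lossRate.
  if (random() < profile.lossRate) return { dropped: true };
  // Bandwidth: bits divided by kilobits-per-second gives milliseconds.
  const transferMs = (payloadBytes * 8) / profile.bandwidthKbps;
  // Jitter: a variable delay on top of the fixed latency.
  const jitter = random() * profile.jitterMs;
  return { dropped: false, delayMs: profile.latencyMs + jitter + transferMs };
}
```

For example, a 125,000-byte response on a 1,000 kbps link with 100 ms latency and no jitter or loss comes out at a 1,100 ms delay: 1,000 ms of transfer time plus the fixed latency.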

To make network control really work in your CI/CD pipelines and automated tests, think of it like creating a mini “sandbox” for your apps. You can set up these sandboxed environments with specific network rules so your tests run under predictable conditions.

Another handy trick is to use recorded API responses. This lets you mimic complex backend scenarios without relying on the real backend every time, which makes your tests faster and more reliable.

And finally, keep environment-specific configurations organized. That way, your tests are reproducible no matter where or when they run, giving you consistent feedback and helping your development flow stay smooth.
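One way to keep that configuration organized is to resolve it from environment variables in a single place. The sketch below is an assumption about how you might do it, not something from the talk: `NETWORK_MODE`, `FIXTURES_DIR`, and the config shape are hypothetical names. The only outside fact it relies on is that many CI providers set `CI=true`.

```typescript
// Hypothetical names: NETWORK_MODE, FIXTURES_DIR and this config shape are
// illustrative and not part of Cypress or any CI provider.
type NetworkMode = "live" | "record" | "replay";

interface NetworkConfig {
  mode: NetworkMode;
  fixturesDir: string;
  failOnUnmatchedRequest: boolean;
}

const NETWORK_MODES: readonly string[] = ["live", "record", "replay"];

function isNetworkMode(value: string): value is NetworkMode {
  return NETWORK_MODES.indexOf(value) !== -1;
}

function resolveNetworkConfig(env: Record<string, string | undefined>): NetworkConfig {
  const isCI = env["CI"] === "true";
  // Default to replay so a plain run never depends on the real backend.
  const requested = env["NETWORK_MODE"] ?? "replay";

  if (!isNetworkMode(requested)) {
    throw new Error(`Unknown NETWORK_MODE: ${requested}`);
  }
  // Recording in CI would silently replace the committed recordings.
  if (isCI && requested === "record") {
    throw new Error("Recording is disabled in CI; record locally and commit the fixtures");
  }
  return {
    mode: requested,
    fixturesDir: env["FIXTURES_DIR"] ?? "fixtures/network",
    // In CI, an unrecorded request should fail the test rather than go live.
    failOnUnmatchedRequest: isCI && requested === "replay",
  };
}
```

In a Node-based setup you would call `resolveNetworkConfig(process.env)` once at startup and pass the result to your test helpers, so local and pipeline runs differ only by their environment variables.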

The biggest advantage of using network control in web testing is that it lets you recreate real-world network conditions on demand. Traditional testing often assumes everything will run perfectly, which isn’t how users experience your app. By controlling the network, you can see exactly how your site behaves under slow connections, timeouts, or unstable networks, and the best part is you can repeat these tests reliably. It helps catch tricky bugs that would otherwise slip through unnoticed.

Hey everyone 👋

Think of AI like a smart assistant that keeps an eye on all your test runs. It can look back at past results and notice patterns, like, “Hmm, this test always fails when the network slows down, but passes fine under normal conditions.” When it spots something like that, it can call out that the failure is likely due to network issues rather than an actual bug in your product. So instead of scratching your head over every failed test, AI helps separate real product problems from those random network hiccups that tend to sneak in during automation.
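The pattern itself ("fails when the network is slow, passes otherwise") can be shown without any model at all. Below is a deliberately simple rule-based sketch that stands in for whatever AI tooling is actually used. The `TestRun` shape, the thresholds, and the verdict labels are all assumptions chosen for illustration.

```typescript
// Hypothetical data shape and thresholds; a plain heuristic, not an AI model.
interface TestRun {
  passed: boolean;
  avgLatencyMs: number; // average network latency observed during the run
}

type Verdict = "likely-network-flake" | "likely-product-bug" | "inconclusive";

function failureRate(runs: TestRun[]): number {
  return runs.filter((run) => !run.passed).length / runs.length;
}

// Compare how often one test fails under slow versus normal network conditions.
function classifyFailures(
  runs: TestRun[],
  slowThresholdMs: number = 500,
  minRunsPerGroup: number = 3
): Verdict {
  const slow = runs.filter((run) => run.avgLatencyMs >= slowThresholdMs);
  const normal = runs.filter((run) => run.avgLatencyMs < slowThresholdMs);

  // Without enough history in both groups, any verdict would be a guess.
  if (slow.length < minRunsPerGroup || normal.length < minRunsPerGroup) {
    return "inconclusive";
  }
  const slowFail = failureRate(slow);
  const normalFail = failureRate(normal);

  // Fails even when the network is healthy: probably a real defect.
  if (normalFail >= 0.8) return "likely-product-bug";
  // Fails mostly when the network is slow: probably network-induced flakiness.
  if (slowFail >= 0.5 && normalFail <= 0.1) return "likely-network-flake";
  return "inconclusive";
}
```

A real system would look at far more signals than latency, but the idea is the same: group past runs by network conditions and check whether the failures follow the network or the product.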
