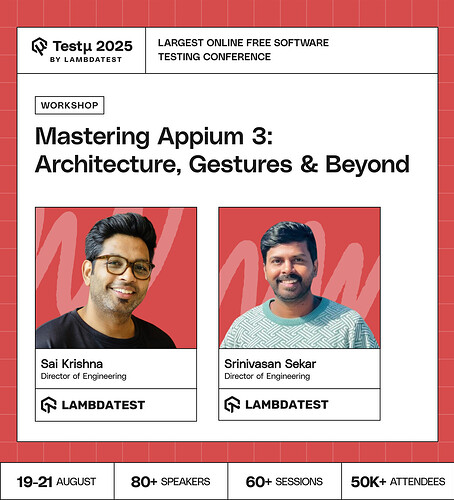

Join Sai Krishna & Srinivasan Sekar as they dive into mastering Appium 3 with its modular, plugin-friendly architecture.

Explore how Appium works under the hood, decode server logs like a pro, and build advanced gestures using the W3C Actions API.

Learn to integrate Appium tests with Model Context Protocol (MCP) for intelligent, context-aware automation at scale.

Walk away with reusable scripts, hands-on examples, and the confidence to bring Appium 3 into modern testing pipelines.

Don’t miss out, book your free spot now

What’s the most useful new feature in Appium 3 that testers should focus on first?

How is Appium expected to evolve to meet the growing challenges of testing apps that integrate with emerging technologies like IoT ecosystems and high-speed 5G networks?

What are the key considerations and challenges in integrating Appium tests into a CI/CD pipeline?

How can AI assist in maintaining Appium scripts across frequent UI/UX changes without manual updates?

With the new modular architecture, what is the performance impact of loading multiple drivers and plugins? Does it significantly affect server startup time or test execution speed?

How can AI and ML be best integrated with Appium to generate more intelligent test cases, predict potential defects, and reduce test maintenance efforts?

In which scenarios does integrating Appium with Model Context Protocol (MCP) provide the most value?

What are a few of the most important strategies and best practices for scaling Appium test execution across multiple devices and platforms?

How is Appium likely to evolve to support the increasing complexity of testing applications interacting with IoT devices, 5G networks, and other emerging mobile tech?

How does Appium 3 architecture protect against session hijacking or unauthorized connections?

Can AI help automatically detect flaky gestures or interactions in mobile apps and suggest robust alternatives?

How can Appium be combined with relevant tools and techniques to ensure both optimal performance and accessibility of mobile apps?

How can Appium’s element location strategies be made more resilient to dynamic UI changes?

Can you explain the significance of the W3C WebDriver Protocol in Appium 3’s architecture?

How does MCP handle dynamic context? For example, if the app’s state changes unexpectedly during a test, can the MCP model adapt in real-time to guide the next steps intelligently?

Do you think AI-powered gesture recognition will replace manual script writing in the next few years—or is that hype?

Is Appium 3 more dev-friendly, more QA-friendly, or both?

Is it possible for AI to suggest the best combination of gestures and interactions to maximize coverage in complex apps?

Do you plan to offer a way to retrieve all elements from Appium Inspector remotely, either through an API (and an AI client) or through AI itself, so that a page structure can be generated in the required language or framework?
