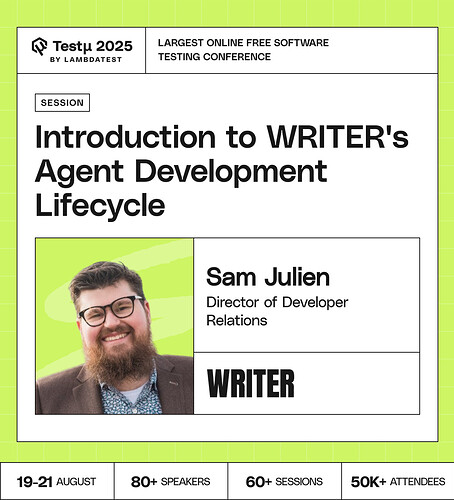

Join Sam Julien as he introduces WRITER’s Agent Development Lifecycle (ADLC), a modern framework built to replace the traditional SDLC for the age of agentic AI.

Discover how ADLC redefines software engineering by focusing on outcome-driven, adaptive, and non-deterministic agents.

Learn practical strategies for shifting your mindset, navigating business vs. production challenges, and moving beyond demos to enterprise-scale AI agents that deliver measurable impact.

Don’t miss out, book your free spot now

How do you ensure AI agents are reliable at scale?

What are the biggest challenges teams face when transitioning from traditional SDLC to an Agent Development Lifecycle, and how can they overcome them?

What unique considerations come into play when establishing scope and requirements for an intelligent agent compared to a non-AI system?

How should the scoping process for an AI-driven agent be approached compared to that of a traditional app?

You’ve positioned the Agent Development Lifecycle (ADLC) as a necessary successor to the SDLC. Could you elaborate on the single most critical “first principle” that distinguishes the ADLC, and why traditional agile or DevOps practices fall short?

How does the role of the Product Manager change within the ADLC? How do you define a product roadmap and write user stories for a system that is outcome-driven rather than feature-driven?

How does the ADLC formally address the non-deterministic nature of agents? Specifically, what replaces traditional concepts like requirements definition, acceptance criteria, and regression testing when an agent’s behavior is designed to be adaptive?

Could you describe what a mature toolchain or tech stack looks like for the ADLC? What kinds of tools are essential for the continuous monitoring, evaluation, and adaptation phases that don’t typically exist in a standard CI/CD pipeline?

How is quality engineering (QE) contributing to a more customer-centric approach in financial services by focusing on user experience, performance, and security throughout the development lifecycle?

What testing strategies should be employed to ensure the reliability and security of these complex multi-agent systems?

Do multimodal datasets change how agents scale?

Which is harder: testing deterministic apps (SDLC) or testing adaptive agents (ADLC)?

How will the shift from writing code to verifying and validating code in an ADLC paradigm impact developer toolchains?

What I'd find more interesting to understand: if testing involves hardware, such as a camera used to validate data, how would agentic CI help here?

What metrics and key performance indicators are most critical for measuring the impact and effectiveness of QE initiatives in achieving business objectives?

What mechanisms ensure data privacy and security when agents continuously learn from enterprise data?

If you had to add one new phase to ADLC that doesn’t exist in SDLC, what would it be?

How should cross-functional teams (AI engineers, domain experts, testers) collaborate under ADLC?

What testing strategies can we employ to ensure the reliability and security of these complex multi-agent systems?
