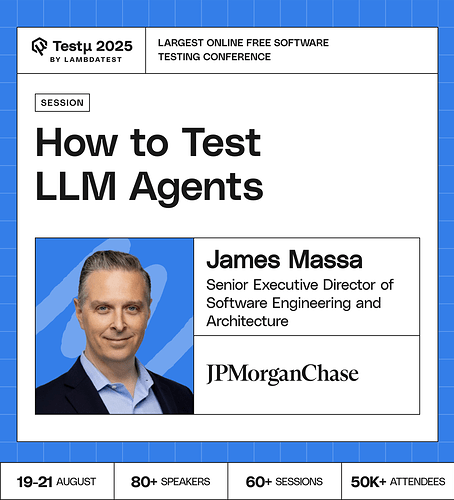

Join James Massa, Senior Executive Director at JPMorgan Chase, as he walks through practical strategies for testing LLM agents in production. Learn how to measure trust, detect hallucinations, validate multi-step workflows, and ensure high-quality data for reliable agent performance.

Discover a framework to test agent orchestration, apply Zero Trust principles to AI data, and evaluate agent behavior with rigor and confidence.

Don’t miss out, book your free spot now

Since LLMs learn from unstructured data, how can QA ensure that poor-quality documents or prompts don’t silently degrade system performance over time?

What metrics are used to quantify the effectiveness and efficiency of tool selection and parameter generation by LLM agents?

Could LLM agents evolve to be ‘self-auditing’, testing their own reasoning steps before responding, and what trade-offs might that create between trust and speed?

What testing strategies best safeguard LLM agents against generating unsafe, biased, or policy-violating responses?

What methods are (or should be) employed to identify and attribute failures within complex LLM agent workflows?

How can testing ensure the safety and alignment of LLM agents, specifically guarding against the generation of harmful, biased, or non-compliant outputs?

How do you picture the QA engineer in the loop in 2026? Which skills will be the highest priority, and which won’t be necessary? How do we stay relevant to businesses?

Do you think people would trust AI more if it showed its step-by-step reasoning (like explaining how it got to an answer), even if that makes it slower? Or are most folks happy with quick, magic-box answers as long as they work?

What metrics are most effective in evaluating an LLM agent: accuracy, coherence, relevance, safety, or something else?

How do you balance scripted test cases with exploratory testing for agents?

My question is about testing for data exfiltration risks. How do we design tests for a scenario where a trusted agent, which has legitimate read access to a database, is hijacked via prompt injection to send that data to an unauthorized external dest

If you had to prioritize only three areas of testing for LLMs in production, what would they be?

Should the fact-checking process for LLM data verification ever be conducted by other LLMs, or always by human testers?

Can LLMs learn to self-check their reasoning before replying, and what compromises would that create between accuracy and efficiency?

What’s the most underrated risk of using AI in QA that no one is talking about?

What methods can detect and prevent hallucinations in responses during testing?

Should LLM agents be tested like deterministic code, or do we need a probabilistic approach?

Should humans remain in the loop for testing LLM agents, and if so, at what checkpoints?

What are the most effective ways to detect hallucinations before they reach end users?
