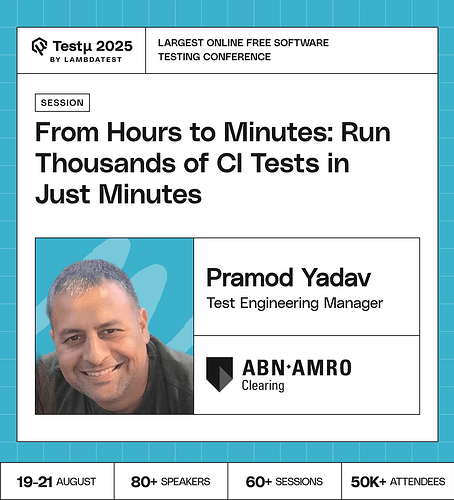

Join Pramod Yadav as he reveals how to cut CI test execution from hours to just minutes with smart orchestration.

Discover how to dynamically scale Playwright test runners based on load, optimize parallelism, and keep execution times predictable no matter the test volume.

Learn practical strategies to run reliable system tests at the PR level, reduce false positives, and ship faster with higher confidence without overspending on infrastructure.

Don’t miss out. Book your free spot now.


What are the key strategies or tools that enable reducing CI test execution from hours to minutes without compromising quality?

How do you balance speed with reliability when scaling thousands of tests in a CI/CD pipeline?

When does the benefit of adding more runners diminish to the point that the trade-off isn’t worth it?

How do you know when increasing the number of parallel runners reaches a plateau in efficiency and becomes counterproductive?

Is there a point of diminishing returns where adding more parallel runners stops decreasing the total run time and just increases cost and complexity?

What is the most common “false positive” or flaky test issue that arises from this high level of parallelism, and how do you recommend teams mitigate it?

What are the risks and benefits of fully automating testing processes with AI-driven tools?

What mechanisms are in place to balance cloud compute cost?

What are the key pain points in parallel execution, and how can you achieve 100 percent parallel runs given resource and infrastructure constraints?

How does this approach handle flaky tests and prevent them from skewing the runner allocation logic or causing unnecessary re-runs, and are there specific mechanisms to detect and quarantine such tests?

How do you monitor and visualize test execution time across thousands of tests?

Does speeding up CI actually improve developer productivity, or just mask deeper testing issues?

How does this orchestration system learn and adapt to changing test loads and execution patterns over time to maintain optimal runner allocation?

Are we talking about UI tests or API tests? Running thousands of tests in such a short amount of time doesn’t sound realistic.

What are the considerations and potential trade-offs in implementing such a dynamic scaling and orchestration system, including resource overhead, cost implications, and integration with existing CI/CD pipelines and infrastructure?

What role should QA professionals play as AI takes on more responsibilities in automation?

How are potential inconsistencies between local and CI environments addressed to ensure accurate estimations and avoid false positives during the dynamic scaling process?

Beyond Playwright, what other testing frameworks (unit, integration, performance, and so on) can benefit most from this dynamic scaling and orchestration approach?

What safeguards can be implemented to prevent AI-driven testing from propagating errors or biases?
