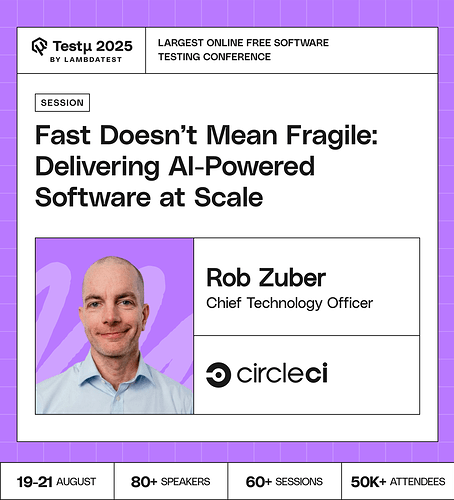

Join Rob Zuber, CTO at CircleCI, as he explores delivering AI-powered software at scale. Learn how to move beyond manual oversight and establish disciplined approaches that balance speed, quality, and confidence across the entire AI-enabled development lifecycle.

Discover how to create feedback loops, build measurable trust in AI applications, and maintain process rigor while shipping fast in an evolving landscape.

Don’t miss out, book your free spot now

How can QA teams validate the quality of AI-generated outputs when the same prompt can produce different results each time?

With the increasing use of GitHub Actions, how will it impact CircleCI?

What technical debt considerations are unique to scaling AI systems, and what’s the best approach for organizations to proactively manage them?

As teams race to ship AI-powered features faster, how can we leverage stochastic or AI-driven testing to ensure robustness — so that speed doesn’t turn into fragility, especially when dealing with non-deterministic model behaviors?

Beyond infrastructure, what are the most important organizational and cultural changes required to foster a “fast but not fragile” mindset for delivering AI at scale?

What techniques are most effective for ensuring model fairness and robustness remain intact as the system serves millions of users?

What automated alerting and rollback mechanisms are essential for maintaining stability during rapid iteration and deployment?

What’s the optimal strategy for managing stateful vs. stateless components in large-scale ML inference pipelines?

Can moving fast with AI ever mean better quality, or is speed always a trade-off?

How do you balance speed and quality when shipping AI-generated code in production?

Given the non-deterministic nature of AI model outputs, how do you balance the speed of AI development and deployment with the need for robust quality assurance and testing?

What are the biggest challenges organizations face when attempting to smoothly scale AI-powered solutions beyond initial proof-of-concept projects?

Since there’s often unpredictability and potential for model drift in AI systems, what specific practices can be implemented to establish and maintain measurable trust in AI-enabled applications throughout their lifecycle?

What’s the biggest challenge in scaling AI-powered software without losing stability?

How can teams balance speed and stability to deliver AI-powered software at scale without compromising quality or resilience?

Do you see Zero-UI ever fully overtaking traditional interfaces, or will both continue to exist side by side?

Do you think Zero-UI will become the standard, or will it always coexist with traditional UIs?

In my opinion, we can use probabilistic and statistical testing: define acceptable ranges or patterns rather than expecting exact matches. We can also run each prompt multiple times and combine the results with reference outputs, anomaly detection, and human spot checks. To detect drift or recurring errors, track these results over time.
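A minimal Python sketch of the statistical approach described above, under stated assumptions: a hypothetical `generate` callable wraps the model, and a made-up acceptance rule requires a "Total:" value between 40 and 44. Each prompt is sampled several times and graded by its pass rate against a threshold instead of one exact string, and a simple drift check compares the recent average pass rate with an earlier baseline. `passes_checks`, `pass_rate`, `drifted`, `fake_model`, the range, the window size, and the tolerance are all illustrative assumptions, not the API of any particular testing tool.

```python
import itertools
import re
import statistics
from typing import Callable, List

# Hypothetical acceptance rule: the output must contain "Total: <n>" with n in an
# acceptable range, instead of matching one exact reference string.
TOTAL_PATTERN = re.compile(r"Total:\s*(\d+)")
LOW, HIGH = 40, 44


def passes_checks(output: str) -> bool:
    """Return True if the output matches the expected pattern and range."""
    match = TOTAL_PATTERN.search(output)
    if match is None:
        return False
    return LOW <= int(match.group(1)) <= HIGH


def pass_rate(generate: Callable[[str], str], prompt: str, runs: int) -> float:
    """Call the same prompt `runs` times and return the fraction of acceptable outputs."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    passed = sum(passes_checks(generate(prompt)) for _ in range(runs))
    return passed / runs


def drifted(history: List[float], window: int = 5, tolerance: float = 0.05) -> bool:
    """Flag drift when the mean of the last `window` pass rates falls more than
    `tolerance` below the mean of the first `window` pass rates (the baseline)."""
    if len(history) < 2 * window:
        return False  # not enough history to compare yet
    baseline = statistics.mean(history[:window])
    recent = statistics.mean(history[-window:])
    return baseline - recent > tolerance


# Hypothetical stand-in for a real model: returns varying text on each call,
# and one of the canned outputs (47) falls outside the acceptable range.
_canned = itertools.cycle(["Total: 41", "Total: 42", "Total: 42", "Total: 43", "Total: 47"])


def fake_model(prompt: str) -> str:
    return next(_canned)


if __name__ == "__main__":
    rate = pass_rate(fake_model, "Sum the invoice lines", runs=200)
    print(f"pass rate: {rate:.2f}")  # 0.80: 4 of every 5 canned outputs pass
    assert rate >= 0.75, "pass rate below agreed threshold"
```

In a CI pipeline, a check like this could fail the build when the pass rate drops below an agreed threshold, while each run's pass rate is appended to a history that `drifted` inspects over time. It complements, rather than replaces, anomaly detection and human spot checks.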

GitHub Actions integrates natively with GitHub repositories, reducing the need for external CI/CD tools. CircleCI may remain relevant for complex pipelines, multi-cloud workflows, or legacy systems, but some teams may consolidate on Actions for simplicity and lower operational overhead.
