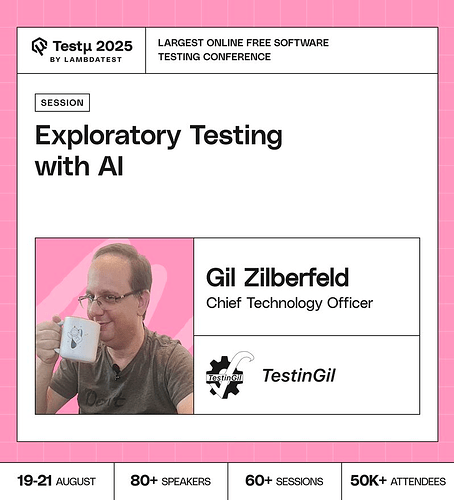

Join Gil Zilberfeld, CTO at TestinGil, as he breaks down the core components of exploratory testing and demonstrates how AI can enhance every stage, from charter creation and test case suggestions to prioritization and bug reporting.

Discover how AI-driven tools can streamline documentation, boost test efficiency, and help manage workloads more effectively.

Don’t miss out, book your free spot now

Since AI often learns from existing patterns, how do we prevent it from reinforcing blind spots?

Exploratory testing relies heavily on human intuition and curiosity. How can AI truly augment this process without limiting creativity?

How will AI change exploratory testing?

How do you balance using AI for exploratory testing without losing the tester’s own intuition?

If AI learns from existing systems and behaviors, is there a danger it will only explore the expected and miss the unexpected?

AI works on probabilities and predictions. What if an AI system decides not to explore an edge case that later turns into a critical defect in production?

How can AI enhance exploratory testing by not just detecting issues, but also predicting user behavior and uncovering hidden risks at scale?

How would you design an AI-driven exploratory testing framework that not only discovers unknown defects dynamically but also learns from previous sessions to continuously improve test coverage and risk detection?

Exploration is often playful and experimental. Can AI ever replicate the playfulness that leads humans to discover hidden issues?

How do you determine which exploratory testing tasks are safe to delegate to AI without losing context or insight?

With AI generating test cases (for both API and UI exploratory testing), what metrics/criteria can test engineers use to evaluate the quality, relevance, and coverage of these AI-generated suggestions?

In exploratory testing, how will AI assistance streamline the process of documenting findings, sessions, and bug reports while maintaining accuracy, clarity, and consistency?

What are your thoughts on how to leverage AI for exploratory testing beyond using prompts to help identify test coverage and charters?

What percentage of your effort would you say is spent confirming that AI is producing correct results (and not hallucinating)?

Is there something similar available for mobile UI testing?

What are the ethical considerations and potential biases when using AI to augment or guide exploratory testing efforts? How would you mitigate those risks and enable fair and representative test coverage?

Which agent/tool was used to create these cases? Is it ChatGPT only?

That’s a really good question. The thing with AI is, it’s only as smart as the data you give it. If you only feed it the same type of scenarios, it’ll keep reinforcing the same patterns and completely miss the odd cases. What I’ve found helpful is to mix things up: give it a wide range of data, including those “weird” outlier situations that don’t happen often but are super important.

And honestly, I never rely on AI alone. It’s great at spotting patterns fast, but it doesn’t have the intuition we humans bring. So I use AI as a partner: it helps me cover a lot of ground, and I use my own tester’s instinct to catch the blind spots it can’t see.

Hey All 🙋‍♂️

That’s a great question! Exploratory testing is all about human intuition, curiosity, and those “aha!” moments that only testers can bring to the table. AI’s role here isn’t to replace that creativity; it’s more like having a smart testing buddy by your side.

For example, AI can surface interesting edge cases you might not have thought about, point out potential risk areas, or even throw in prompts to spark new testing ideas. But the real decisions, the creative jumps, the prioritization, the “what if I try this crazy scenario”, still come from us, the testers.

So think of AI as an assistant that helps you cover more ground faster, while you stay in the driver’s seat guiding the exploration. It doesn’t take away creativity; it frees you up to use it even more.
