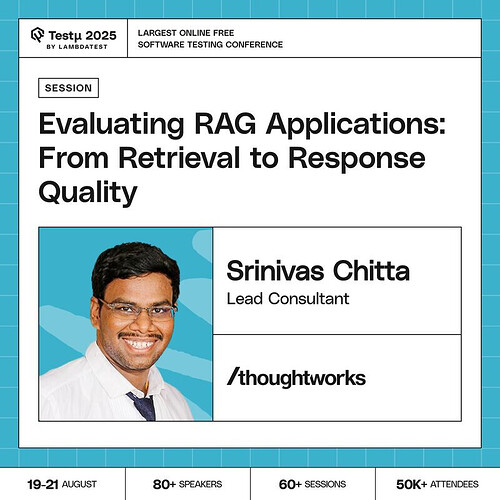

Join Srinivas Chitta, Lead Consultant at Thoughtworks, as he breaks down how to effectively evaluate RAG (Retrieval-Augmented Generation) applications.

If you’re building or managing #GenAISolutions, this session is packed with practical strategies to assess accuracy, reliability, and real user value, from pipeline setup to confident deployment.

[Grab your free pass now](LinkedIn)

How can we ensure response accuracy and reduce hallucinations when evaluating end-to-end RAG systems?

How does RAGAS handle the evaluation of responses where the “ground truth” is ambiguous or subjective, which is common in many real-world enterprise use cases?

What’s more important in a RAG pipeline: high recall in retrieval or precision in generation?

What are the most practical metrics to evaluate the quality of retrieval in RAG applications beyond just relevance?

What metrics can be used to evaluate the quality of retrieval?

How do you recommend teams set up an automated evaluation loop using RAGAS within a CI/CD pipeline to continuously monitor and prevent regressions in their RAG application?

What are the challenges in implementing a RAG system?

Would you trust a RAG app to answer compliance-related questions without human review?

What’s worse in a RAG app, irrelevant retrieval or confidently wrong responses?

How can test datasets be made representative of real-world user queries and edge cases to effectively evaluate both retrieval and generation quality?

How can the evaluation of the retrieval and generation components be handled separately to pinpoint performance bottlenecks more effectively?

How can automated testing pipelines and continuous integration be used to streamline the RAG evaluation process and enable faster iterations?

How important is it to use RAG?

How do you measure if your RAG system is retrieving the right documents and not just relevant-looking ones?

What metrics best capture the quality of document retrieval in RAG applications?

Have you ever seen poor retrieval completely distort the final AI response?

Are you optimizing for retrieval precision or coverage, and what trade-offs are you willing to live with?

What are the frameworks to evaluate RAG applications?

How do you balance retrieval granularity with latency in production RAG?
