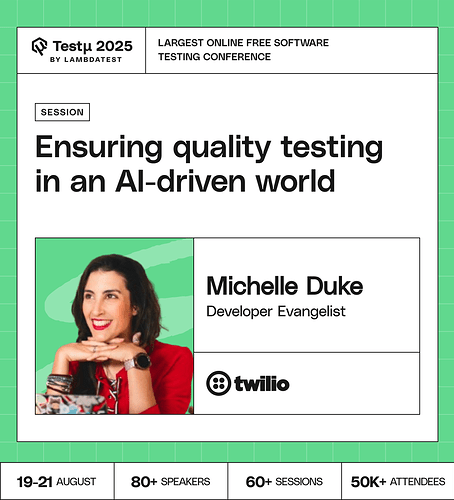

Join Michelle Duke as she explores "Ensuring Quality Testing in an AI-Driven World" and shows you how to adapt to the rapidly evolving landscape of AI-powered testing.

Discover how AI-driven automation, predictive analytics, and intelligent quality assurance are reshaping software testing, while human oversight, empathy, and intuition remain critical.

Learn practical strategies for using AI effectively in testing, balancing automation with critical thinking, and preparing for a future of quality assurance in which software shapes real-world experiences.

Don’t miss out: book your free spot now.

What’s the most important thing testers should focus on to ensure quality in an AI-driven world?

How should validation practices evolve when traditional pass/fail expectations don’t fully capture the variability in test outcomes?

How can we ensure that AI-driven testing tools accurately interpret human intent?

What can people in developer or SDET roles learn to upskill and keep up with the AI gold rush?

As AI becomes more convincing at mimicking human intent, what dangers arise if we start trusting it too much and stop applying our own scrutiny?

Could you share a real-world example of a bug or a user experience issue that was found specifically because of human empathy, which an AI testing tool likely would have missed?

As AI gets better at simulating an understanding of human intent, what is the biggest risk of us becoming overconfident in its abilities and slowly abdicating our own critical judgment?

How do you recommend a team create tangible, measurable tests for abstract concepts like ‘fairness’ or ‘ethical considerations’ in an AI system? What does that test case look like?

Unlike the outputs of traditional apps, AI outputs are often probabilistic. How should QA teams define pass/fail criteria for AI-driven systems?

What frameworks and best practices are emerging to address accountability and liability when AI-driven testing systems fail to detect critical flaws in software that impacts real-world experiences?

As “self-testing” and “self-healing” AI systems emerge, how can we develop testing methodologies that validate the effectiveness of these autonomous capabilities and confirm that they genuinely improve software quality?

How can organizations effectively balance the efficiency and scalability of AI-driven testing automation with the necessity of human critical thinking and empathetic understanding?

What architectural considerations and design principles are key for building AI-driven test automation frameworks that are efficient, scalable and resilient to changes in application code, UI elements, and data patterns?

How can we prevent traditional test metrics (coverage, pass rate) from giving a false sense of confidence in AI-driven systems?

What differences do you see between AI-assisted UI testing and data testing? How do Agile and Waterfall methodologies affect the answer for each?

What practices are mandatory in the growing world of AI and agentic systems? How does testing there differ from traditional software testing?

How do we test AI systems when the same input can lead to different outputs each time?

What does a “test oracle” look like in AI, when there is no single correct answer?

How do we measure the quality of AI decisions beyond accuracy, for example fairness, explainability, or trust?
