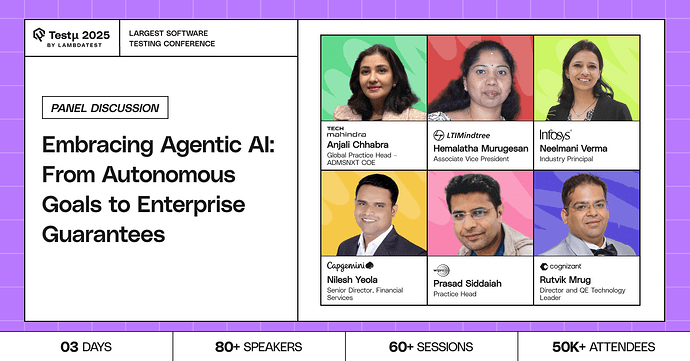

Join leading Quality Engineering experts Anjali Chhabra, Hemalatha Murugesan, Neelmani Verma, Nilesh Yeola, Prasad Siddaiah, and Rutvik Mrug as they explore how Agentic AI is reshaping the future of software development and assurance.

Discover how autonomous systems accelerate innovation, reduce human error, and enhance product quality by driving decisions and actions without manual intervention.

Learn strategies to integrate Agentic AI into enterprise testing pipelines, balance automation with control, and ensure reliability in critical software functions.

Uncover how Agentic AI is enabling enterprise-wide transformation and delivering competitive advantage through faster, smarter engineering.

Don’t miss out. Book your free spot now.

How can organizations ensure their test data and environments are representative enough to validate agentic AI performance in real-world use cases?

What are the practical steps to move from experimental agentic AI use cases to enterprise-grade guarantees in production?

What’s the biggest risk companies face when moving from autonomous AI goals to enterprise-scale guarantees?

How can the autonomous decision-making capabilities of Agentic AI systems be validated, especially when their actions are non-deterministic and evolve over time?

How can organizations best balance the benefits of Agentic AI’s autonomy with the need for human oversight and control in critical software functions?

How can QA and governance teams work alongside autonomous AI agents?

How do we go from experimentation to guarantees in LLM agent design?

Aside from traditional accuracy measures, what benchmarking strategies and metrics are most suitable for evaluating the performance and effectiveness of agentic AI systems in a production environment?

How can organizations balance the autonomy of AI agents with the need for human oversight to maintain accountability in quality assurance?

What are the main considerations and challenges in creating comprehensive test data and environments that adequately represent real-world scenarios for agentic AI?

As work decisions become more autonomous, what is the future role of the human QE professional? What skills should they be developing now to stay relevant?

What’s your biggest concern with deploying autonomous AI at scale?

What is the most significant technical challenge when integrating autonomous AI agents with existing complex, legacy systems and testing frameworks?

What strategies can organizations employ to manage the data quality and governance requirements for agentic AI, given the vast and potentially disparate data sources it draws from?

For root-cause analysis (RCA) agents in Datadog that fix issues in the production environment and monitor the results: build or buy, which has been more effective given how fast the landscape is changing?

Does Agentic AI handle unexpected failures, edge cases, or anomalies in testing scenarios?

What are the critical factors in designing agentic AI architectures that prioritize security, privacy, and compliance while enabling autonomous operations?

Crawl, walk, or run: where would you place your team in adopting AI-powered testing?

How can organizations establish clear lines of accountability and responsibility for agentic AI’s actions and decisions, especially when errors or unintended consequences occur?
