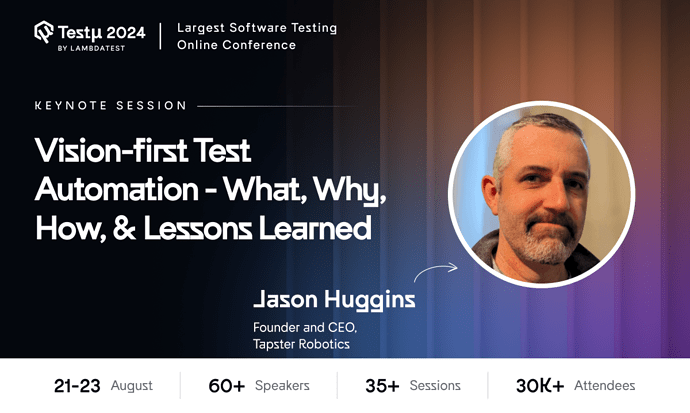

Join us for an insightful talk on Vision-first Test Automation with Jason Huggins, Founder & CEO of Tapster Robotics. Learn the what, why, how, and key lessons learned in this groundbreaking approach to testing.

Jump into the live session by registering now!

Got questions? Drop them in the thread below and get them answered live!

Hi there,

If you couldn’t catch the session live, don’t worry! You can watch the recording here:

Here are some Q&As from the session!

How does vision-first automation handle accessibility and cross-device testing?

Jason: Vision-first automation utilizes visual recognition to test the appearance and functionality of applications across different devices and screen sizes. This approach ensures that accessibility features are properly implemented and verifies that the UI remains consistent and functional across various platforms and configurations.
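To make "visual recognition" a little more concrete, here is a minimal sketch of one common building block: finding a UI element on a screenshot by template matching, instead of by a DOM or accessibility locator. It assumes screenshots are grayscale images stored as Python lists of pixel rows (0-255). The function name `find_template` and the `max_mean_diff` threshold are hypothetical and chosen for illustration; this is not the tooling Jason described in the session. Production tools usually rely on optimized libraries (for example OpenCV's template matching) and must cope with scale differences between screen sizes, which this brute-force version does not.

```python
from typing import List, Optional, Tuple

# Hypothetical representation: a grayscale screenshot as rows of 0-255 intensities.
Image = List[List[int]]


def find_template(screen: Image, template: Image,
                  max_mean_diff: float = 10.0) -> Optional[Tuple[int, int]]:
    """Return the (row, col) of the best match of `template` inside `screen`,
    or None if no position is close enough.

    Score = mean absolute pixel difference (lower is better).
    Brute-force search; does NOT handle scaling or rotation.
    """
    sh, sw = len(screen), len(screen[0])
    th, tw = len(template), len(template[0])
    best: Optional[Tuple[int, int]] = None
    best_score = float("inf")
    # Slide the template over every position where it fully fits.
    for r in range(sh - th + 1):
        for c in range(sw - tw + 1):
            total = 0
            for dr in range(th):
                screen_row = screen[r + dr]
                template_row = template[dr]
                for dc in range(tw):
                    total += abs(screen_row[c + dc] - template_row[dc])
            score = total / (th * tw)
            if score < best_score:
                best_score, best = score, (r, c)
    # Reject weak matches so a missing element is reported as None.
    return best if best_score <= max_mean_diff else None


if __name__ == "__main__":
    # A 5x6 dark "screen" with a bright 2x2 "button" at row 2, column 3.
    screen = [[0] * 6 for _ in range(5)]
    for r in (2, 3):
        for c in (3, 4):
            screen[r][c] = 200
    print(find_template(screen, [[200, 200], [200, 200]]))
```

A test script would then tap or click at the centre of the returned region, which is how a vision-first tool can drive an app without knowing anything about its internal view hierarchy.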

Why is a vision-first approach sometimes necessary?

Jason: A vision-first approach is necessary when dealing with complex user interfaces that involve dynamic content and visual elements. It helps ensure that the application behaves as expected from a user’s perspective, regardless of device or screen size, and provides a reliable method for detecting visual discrepancies and accessibility issues.
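One common way to detect visual discrepancies is to compare a new screenshot against an approved baseline and flag the run if too many pixels changed. The sketch below assumes same-sized grayscale screenshots stored as lists of pixel rows; the function names and both thresholds (`pixel_tolerance`, `max_changed_ratio`) are hypothetical values for illustration, not settings from any specific tool mentioned in the session.

```python
from typing import List

# Hypothetical representation: a grayscale screenshot as rows of 0-255 intensities.
Image = List[List[int]]


def diff_ratio(baseline: Image, current: Image, pixel_tolerance: int = 16) -> float:
    """Fraction of pixels whose values differ by more than `pixel_tolerance`.

    The tolerance absorbs small rendering noise (e.g. anti-aliasing).
    """
    if len(baseline) != len(current) or any(
        len(a) != len(b) for a, b in zip(baseline, current)
    ):
        raise ValueError("screenshots must have the same dimensions")
    total = 0
    changed = 0
    for row_a, row_b in zip(baseline, current):
        for a, b in zip(row_a, row_b):
            total += 1
            if abs(a - b) > pixel_tolerance:
                changed += 1
    return changed / total if total else 0.0


def has_visual_discrepancy(baseline: Image, current: Image,
                           max_changed_ratio: float = 0.01,
                           pixel_tolerance: int = 16) -> bool:
    """True if more than `max_changed_ratio` of the pixels changed noticeably."""
    return diff_ratio(baseline, current, pixel_tolerance) > max_changed_ratio


if __name__ == "__main__":
    baseline = [[100] * 10 for _ in range(10)]
    current = [row[:] for row in baseline]
    current[0][0] = 255  # two clearly changed pixels out of 100
    current[5][5] = 255
    print(diff_ratio(baseline, current), has_visual_discrepancy(baseline, current))
```

In practice you would keep one baseline per device or screen size, since the same screen legitimately renders differently across form factors.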

Now, let’s see some of the unanswered questions!

Can you share real-world examples or case studies where vision-first test automation has proven successful?

Which tool is best suited for test automation?

How does the vision-first approach compare to more traditional methods in terms of effectiveness and efficiency?

How difficult is it to test smartphone cameras used in mobile AR/VR and other advanced sensory applications?

How can we apply this knowledge of robot automation to real-world scenarios, for example by starting a company in our home countries?

Could you please list some tools or resources for testing automotive cameras and sensors?

What kind of AI models would you recommend for use in this kind of automated testing - open source, 1st party, 3rd party, etc.?

What challenges or limitations have you encountered with vision-first test automation, and how have you addressed them?

Can you share a specific case where vision-first test automation significantly improved the testing process?

Isn’t Appium trying to do the same thing, but for web testing?

How is computer vision more useful?

Apart from Appium, which tools are in demand for mobile automation nowadays?

How could AI tools and processes help with the computer vision and other testing automation processes that could be “baked in” to the DIY-style framework shown earlier?

Which programming language is in higher demand for test automation: Java or Python?

In which areas of industry is robotics development currently most dynamic?

Are there apps that are more of a challenge for this automated/robotics-assisted testing? What adaptations to the process would you recommend for complex or challenging apps such as those?