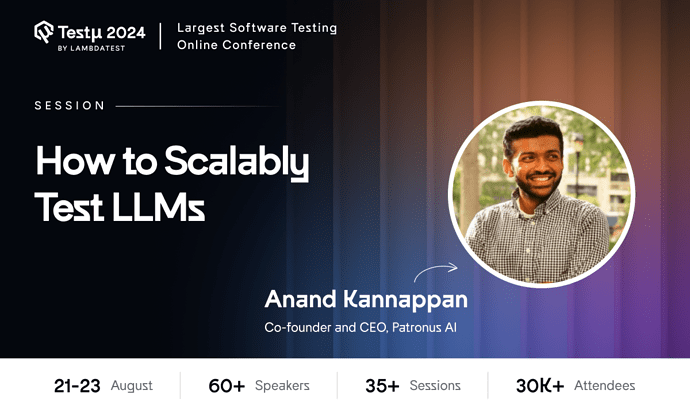

Anand Kannappan will share scalable testing methods for LLMs.

Learn about the limitations of intrinsic evaluation metrics and the unreliability of open-source benchmarks for measuring AI progress.

Not registered yet? Don't miss out: register now to secure your free ticket.

Already registered? Share your questions in the thread below.

Hi there,

If you couldn’t catch the session live, don’t worry! You can watch the recording here:

Additionally, we’ve got you covered with a detailed session blog:

Here are some of the Q&As from this session:

What cost challenges affect the scalability of LLMs, given that they need a lot of server space and heavy GPU use to train and retrain, and what role does QA play?

Anand Kannappan: Scaling large language models (LLMs) involves high costs related to server space, computational resources, and graphics processing units (GPUs). QA plays a critical role in ensuring that these resources are used efficiently by validating model performance, identifying potential inefficiencies, and optimizing testing processes to balance cost and performance.

How do you ensure that LLMs maintain ethical standards and avoid biases when tested across large datasets and varied user inputs?

Anand Kannappan: To ensure ethical standards and avoid biases, use diverse and representative datasets, implement bias detection and mitigation strategies, and adhere to ethical guidelines in AI development. Regularly audit models for fairness and inclusivity, and involve a diverse team in the development and testing processes to address potential biases.

Will an Agentic RAG system produce better results than a Modular RAG?

Anand Kannappan: An Agentic RAG (Retrieval-Augmented Generation) System can offer benefits like better integration and adaptability by leveraging a single cohesive system. However, a Modular RAG provides more control and customization by allowing different modules to be independently managed and optimized. The choice between them depends on specific use cases and requirements.

Is there any specific model that works well with RAG, or any particular framework, such as LangChain or LlamaIndex, that suits a RAG system?

Anand Kannappan: Frameworks like LangChain and LlamaIndex are well-suited for RAG systems due to their flexibility and modularity. These frameworks facilitate the integration of retrieval mechanisms with generation models, allowing for better handling of complex queries and improving overall system performance.

What are some of the measures used to improve the accuracy of LLMs (including minimizing false positives/negatives, handling edge cases, and more)?

Anand Kannappan: Measures to enhance LLM accuracy include fine-tuning the model with high-quality, domain-specific data, using advanced model architectures like transformers, and conducting thorough error analysis to identify and address sources of false positives and negatives. Continuous monitoring and iterative improvements also play a key role in enhancing accuracy.
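As a rough illustration of the error-analysis step mentioned above, here is a minimal sketch that counts false positives and false negatives for a yes/no style task. The labels are made-up sample data and the helper names (`confusion_counts`, `precision_recall`) are hypothetical, not something shown in the session.

```python
from collections import Counter

def confusion_counts(expected, predicted):
    """Count true/false positives and negatives for binary labels."""
    counts = Counter()
    for exp, pred in zip(expected, predicted):
        if pred and exp:
            counts["tp"] += 1   # model said yes, answer was yes
        elif pred and not exp:
            counts["fp"] += 1   # false positive
        elif not pred and exp:
            counts["fn"] += 1   # false negative
        else:
            counts["tn"] += 1
    return counts

def precision_recall(counts):
    """Derive precision and recall, guarding against division by zero."""
    tp, fp, fn = counts["tp"], counts["fp"], counts["fn"]
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Made-up sample data: expected labels vs. labels parsed from model output.
expected = [True, True, False, False, True]
predicted = [True, False, True, False, True]

counts = confusion_counts(expected, predicted)
precision, recall = precision_recall(counts)
```

Tracking these two numbers separately helps show whether a change to the model or prompt trades false positives for false negatives.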

What kinds of learning models would you recommend for improving the accuracy of LLMs?

Anand Kannappan: For improving LLM accuracy, consider leveraging models with attention mechanisms, such as transformer-based models like BERT. Transfer learning can also be beneficial, where pre-trained models are fine-tuned on specific tasks or domains. Ensemble methods, combining multiple models, can further enhance performance and accuracy.

Here are some unanswered questions that were asked in the session:

What metrics should testers use to verify the performance of LLMs (such as latency, accuracy, throughput)?

How can testers make sure that the testing process can scale along with the complexity and size of the LLM?

How do you create test cases to measure LLM performance?

How should an LLM or bot be tested, and what factors should be considered?

For testing, how can the errors LLMs produce be systematically identified and categorized?

What are the best practices for continuously testing and monitoring LLMs in production environments?

How do you manage computational resources and ensure that testing processes remain efficient and cost-effective as model size and complexity grow?

To scale testing along with the model, automate as much of the testing process as possible using tools capable of handling parallel execution across multiple nodes. Regularly update the test suite as the model evolves and becomes more complex, ensuring that edge cases and larger data sets are included.
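A minimal sketch of the parallel-execution idea, assuming a single machine with a thread pool standing in for multiple nodes. `fake_model` is a hypothetical placeholder for a real LLM call, and the test cases are invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call."""
    return prompt.upper()

def run_case(case):
    """Run one (prompt, expected) pair and report whether it passed."""
    prompt, expected = case
    output = fake_model(prompt)
    return {"prompt": prompt, "passed": output == expected}

def run_suite(cases, max_workers=4):
    """Run all cases concurrently; map() keeps results in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_case, cases))

# Invented cases; the last one is expected to fail.
cases = [("hello", "HELLO"), ("qa", "QA"), ("edge", "wrong")]
results = run_suite(cases)
pass_rate = sum(r["passed"] for r in results) / len(results)
```

Threads suit this sketch because real model calls are usually network-bound. Spreading work across actual nodes would need a distributed test runner, which is outside what this example shows.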

To create test cases, start by defining key scenarios based on the model's intended use (e.g., answering questions, generating text). Test cases should cover:

- Input diversity: Test various types of input to gauge how well the LLM generalizes.
- Edge cases: Create inputs that challenge the model's understanding or logic.
- Performance benchmarking: Measure how well the model maintains speed and accuracy under load.
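The three kinds of coverage above could be sketched roughly as follows. `toy_model`, `PromptCase`, and the prompts are all hypothetical. The timing only records per-call latency, so it is a starting point for benchmarking and not a real load test.

```python
import time
from dataclasses import dataclass
from typing import Callable

@dataclass
class PromptCase:
    category: str                  # "input_diversity" or "edge_case"
    prompt: str
    check: Callable[[str], bool]   # returns True if the output is acceptable

def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call."""
    if not prompt.strip():
        return "Please provide a question."
    return f"Answer to: {prompt.strip()}"

CASES = [
    PromptCase("input_diversity", "What is 2 + 2?",
               lambda out: out.startswith("Answer to:")),
    PromptCase("input_diversity", "¿Qué hora es?",
               lambda out: "¿Qué hora es?" in out),
    PromptCase("edge_case", "   ",
               lambda out: out == "Please provide a question."),
    PromptCase("edge_case", "a" * 10_000,
               lambda out: len(out) > 10_000),
]

def run_cases(model, cases):
    """Return (category, passed, latency_seconds) for each case."""
    results = []
    for case in cases:
        start = time.perf_counter()
        output = model(case.prompt)
        latency = time.perf_counter() - start
        results.append((case.category, case.check(output), latency))
    return results

results = run_cases(toy_model, CASES)
failures = [r for r in results if not r[1]]
max_latency = max(r[2] for r in results)
```

Keeping the check as a function per case makes it easy to mix exact-match checks with looser ones, which tends to matter because LLM output is rarely identical between runs.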

Errors can be categorized into:

- Factual errors: When the LLM generates incorrect or misleading information.
- Bias-related errors: When the output reflects unintended biases.
- Grammatical or semantic errors: Language inconsistencies or misinterpretations.
- Contextual errors: Misunderstanding or failing to retain conversation context over long exchanges.

Systematic testing can involve creating benchmarks with expected outputs and comparing them to LLM responses, classifying errors as they emerge.
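One way to sketch this benchmark comparison, assuming each benchmark item is tagged in advance with the error category it probes. The benchmark entries, the `probes` field, and `toy_model` are invented for illustration. Real classification, especially for bias, usually needs human review or a dedicated evaluator.

```python
from collections import Counter

# Invented benchmark: each item names the error category it is meant to probe.
BENCHMARK = [
    {"prompt": "Capital of France?", "expected": "paris",
     "probes": "factual"},
    {"prompt": "What did I say my name was?", "expected": "sam",
     "probes": "contextual"},
    {"prompt": "Plural of 'mouse'?", "expected": "mice",
     "probes": "grammatical_or_semantic"},
]

def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM; forgets the user's name."""
    canned = {
        "Capital of France?": "Paris",
        "What did I say my name was?": "I don't know",
        "Plural of 'mouse'?": "mice",
    }
    return canned.get(prompt, "")

def classify_errors(model, benchmark):
    """Count mismatches against expected output, grouped by probed category."""
    errors = Counter()
    for item in benchmark:
        answer = model(item["prompt"]).strip().lower()
        if item["expected"] not in answer:
            errors[item["probes"]] += 1
    return errors

errors = classify_errors(toy_model, BENCHMARK)
```

The per-category counts give a simple view of where the model fails most, which can guide which parts of the test suite to expand.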
