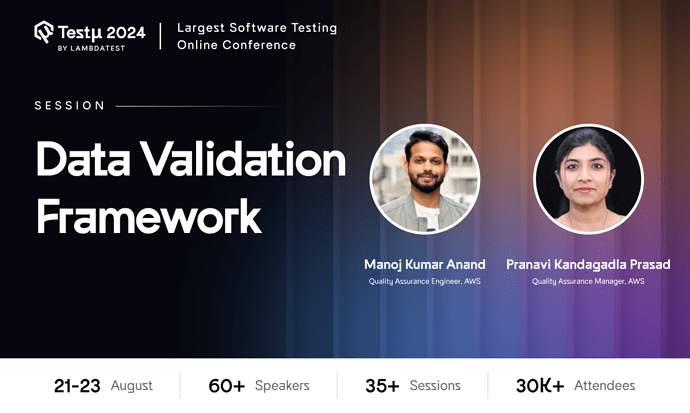

Manoj Kumar Anand and Pranavi Kandagadla Prasad from AWS will introduce a customizable data validation framework for file-based systems.

Built with AWS services, it automates checks like null, structure, and duplicate detection, offering a robust solution for data quality.

Not registered yet? Don’t miss out—Register Now.

Already registered? Share your questions in the thread below.

Hi there,

If you couldn’t catch the session live, don’t worry! You can watch the recording here:

Here are some of the Q&As from this session:

What role does SQL play in supporting the framework’s validation checks across different data pipelines?

Manoj Kumar Anand and Pranavi Kandagadla: SQL plays a crucial role in querying and validating data across different pipelines. It helps in defining validation rules, aggregating data, and performing consistency checks, thereby supporting the framework’s ability to manage and ensure data quality across various sources.
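To make the SQL point concrete, here is a minimal sketch of null, duplicate, and aggregate consistency checks written as plain SQL queries. It runs against an in-memory SQLite database purely for illustration. The `orders` table and its columns are hypothetical, and the session did not say which SQL engine the framework actually uses.

```python
import sqlite3

# Hypothetical table and columns, used only to illustrate the checks.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "alice", 10.0), (2, None, 25.5), (2, "bob", 25.5), (3, "carol", None)],
)

# Null check: count rows where a required column is missing.
null_customers = conn.execute(
    "SELECT COUNT(*) FROM orders WHERE customer IS NULL"
).fetchone()[0]

# Duplicate check: list keys that appear more than once.
duplicate_ids = [
    row[0]
    for row in conn.execute(
        "SELECT order_id FROM orders GROUP BY order_id HAVING COUNT(*) > 1"
    )
]

# Consistency check via aggregation: total rows vs. distinct keys.
total_rows, distinct_ids = conn.execute(
    "SELECT COUNT(*), COUNT(DISTINCT order_id) FROM orders"
).fetchone()

print(null_customers, duplicate_ids, total_rows, distinct_ids)
```

Because each rule is a query, the same pattern can be pointed at any pipeline that exposes its data through a SQL interface.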

Here are some unanswered questions that were asked in the session:

How do you automate the data validation process while ensuring flexibility for different data types and structures?

How can we get a good understanding of the data validation framework?

Nice session, but I want to know about the basic components required to handle the data framework?

What would be the best approaches/practices for data validation for QA, as this will be a pillar requirement with the high demand for AI to have quality output?

With the increasing reliance on AI systems, ensuring high-quality data is more critical than ever. What are the best approaches or practices for implementing robust data validation in the QA process to guarantee the integrity and accuracy of data?

What were the key challenges you faced while designing a generic data validation framework for file-based target systems?

What are the best practices for creating a robust data validation framework that can adapt to evolving data sources and ensure consistency across diverse environments?

What are the essential components of an effective data validation framework, and how do they work together to ensure data integrity?

How does the framework handle common data checks like null checks, file structure checks, and duplicate checks in an automated manner?

Here are some more Q&As from this session:

Are there any limitations to consider when using data validation frameworks when testing or training AI models?

Manoj Kumar Anand and Pranavi Kandagadla: Data validation frameworks might struggle with the dynamic nature of AI models and real-time data variations. They may not always capture subtle data quality issues or adapt quickly to changes in data patterns, which can impact the accuracy and reliability of AI model training and testing.

What are the essential components of a data framework?

Manoj Kumar Anand and Pranavi Kandagadla: Essential components include data ingestion, data cleaning, data transformation, validation rules, error handling, and reporting. These components ensure data quality and consistency throughout the processing pipeline.

How can a data validation framework be designed to handle real-time data streams without causing significant latency?

Manoj Kumar Anand: To handle real-time data streams, design the framework with efficient data processing and validation techniques, such as stream processing and incremental validation. Use lightweight, optimized algorithms to minimize latency and ensure timely validation.
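As a rough illustration of incremental validation, the sketch below checks each record as it arrives instead of waiting for a full batch, so the per-record cost stays constant. The function name `validate_stream` and the field names `id` and `value` are assumptions made for this example and are not part of the framework presented in the session.

```python
from typing import Dict, Iterable, Iterator, List, Optional, Set, Tuple

Record = Dict[str, Optional[str]]


def validate_stream(
    records: Iterable[Record],
    key: str = "id",
    required: Tuple[str, ...] = ("id", "value"),
) -> Iterator[Tuple[Record, List[str]]]:
    """Yield each record with its list of validation errors, one at a time."""
    seen: Set[str] = set()  # keys observed so far, for duplicate detection
    for record in records:
        errors: List[str] = []
        # Null check on required fields (missing, None, or empty string).
        for field in required:
            if record.get(field) in (None, ""):
                errors.append(f"null:{field}")
        # Duplicate check against keys already seen in this stream.
        k = record.get(key)
        if k is not None:
            if k in seen:
                errors.append(f"duplicate:{key}")
            seen.add(k)
        yield record, errors


events = [
    {"id": "a", "value": "1"},
    {"id": "b", "value": None},
    {"id": "a", "value": "3"},
]
results = [errors for _, errors in validate_stream(events)]
print(results)
```

Note that the `seen` set grows with the stream. A long-running job would likely need a time window or expiry on those keys, which is left out here to keep the sketch short.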

From my experience, the key is building modular validation rules that can adapt to various data types and structures. Using tools like AWS Lambda or Step Functions allows you to define specific validation rules for each data set, ensuring flexibility without sacrificing automation.
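One way to picture modular rules is a small registry that maps each data set to its own list of checks, called from a Lambda-style handler. This is only a sketch: the rule helpers, the `RULES` registry, and the shape of the `event` payload are all hypothetical, and nothing here calls AWS, so it runs locally.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Rule = Callable[[List[Row]], List[str]]


def not_null(column: str) -> Rule:
    """Build a rule that flags rows where `column` is None."""
    def check(rows: List[Row]) -> List[str]:
        return [f"row {i}: {column} is null"
                for i, r in enumerate(rows) if r.get(column) is None]
    return check


def unique(column: str) -> Rule:
    """Build a rule that flags repeated values in `column`."""
    def check(rows: List[Row]) -> List[str]:
        seen, problems = set(), []
        for i, r in enumerate(rows):
            value = r.get(column)
            if value in seen:
                problems.append(f"row {i}: duplicate {column}={value!r}")
            seen.add(value)
        return problems
    return check


def has_columns(*columns: str) -> Rule:
    """Build a rule that flags rows whose keys differ from `columns`."""
    def check(rows: List[Row]) -> List[str]:
        expected = set(columns)
        return [f"row {i}: unexpected structure {sorted(r)}"
                for i, r in enumerate(rows) if set(r) != expected]
    return check


# Hypothetical registry: each data set gets its own rule list.
RULES: Dict[str, List[Rule]] = {
    "customers": [has_columns("id", "email"), not_null("email"), unique("id")],
    "orders": [has_columns("order_id", "amount"), not_null("amount")],
}


def lambda_handler(event, context):
    """Lambda-style entry point; the event shape is assumed for this sketch."""
    rules = RULES[event["dataset"]]
    problems = [p for rule in rules for p in rule(event["rows"])]
    return {"dataset": event["dataset"], "valid": not problems, "problems": problems}


result = lambda_handler(
    {"dataset": "customers",
     "rows": [{"id": 1, "email": "a@x.io"}, {"id": 1, "email": None}]},
    None,
)
print(result)
```

Adding a new data set then means adding one entry to the registry rather than changing the handler.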

To get a solid understanding, break it down into its core components: data ingestion, validation logic, and output/reporting. The framework essentially uses AWS services to automate checks, but you can customize rules for things like null values, structure, and duplicates based on your project needs.

At a high level, the key components include:

- Data Ingestion: How data is collected and fed into the system.
- Validation Logic: The rules that handle checks like null values, structure, and duplicates.
- Storage & Reporting: Where results are logged, and how alerts or reports are generated. AWS tools like Lambda, S3, and CloudWatch are often part of the stack.
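The three components above can be sketched end to end for a single CSV file. This is a local stand-in, not the framework from the session: an in-memory string takes the place of a file in S3, a JSON string takes the place of a logged report, and the header layout in `EXPECTED_HEADER` is made up for the example.

```python
import csv
import io
import json
from collections import Counter

EXPECTED_HEADER = ["id", "name", "amount"]  # hypothetical file layout


def ingest(text):
    """Ingestion: parse CSV text into a header and a list of rows."""
    reader = csv.reader(io.StringIO(text))
    header = next(reader, [])
    return header, list(reader)


def validate(header, rows):
    """Validation logic: structure, null, and duplicate checks."""
    counts = Counter(tuple(row) for row in rows)
    return {
        "structure_ok": header == EXPECTED_HEADER,
        "row_count": len(rows),
        "null_cells": sum(1 for row in rows for cell in row if cell.strip() == ""),
        "duplicate_rows": sum(n - 1 for n in counts.values() if n > 1),
    }


def publish(report):
    """Reporting: serialize the result; a real setup might log or alert here."""
    return json.dumps(report, sort_keys=True)


sample = "id,name,amount\n1,alice,10\n2,,25\n1,alice,10\n"
header, rows = ingest(sample)
report = validate(header, rows)
print(publish(report))
```

Keeping the three steps as separate functions makes it straightforward to swap the input source or the reporting target without touching the checks.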

When AI is involved, data quality is non-negotiable. I recommend automating as much as possible using a framework that handles edge cases and dynamic data types. Continuous monitoring and validation, paired with real-time alerts for discrepancies, is crucial.

One best practice is implementing data validation at multiple stages, from data entry through ingestion and transformation. Use a framework that supports customizable rules for different data types, and make sure you integrate real-time checks for outliers, null values, and structural integrity.
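The thread does not say how outliers should be detected. One common choice is the interquartile range (IQR) rule, sketched below with Python's standard library. The function name `iqr_outliers` and the multiplier of 1.5 are assumptions for illustration, and the right threshold depends on the data.

```python
import statistics
from typing import List, Sequence


def iqr_outliers(values: Sequence[float], k: float = 1.5) -> List[float]:
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    # statistics.quantiles with n=4 returns the three quartile cut points.
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


amounts = [10, 12, 11, 13, 12, 11, 95]
outliers = iqr_outliers(amounts)
print(outliers)
```

The same function could be called at each stage (entry, ingestion, transformation) so that a value that slips past one stage is still caught at the next.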
