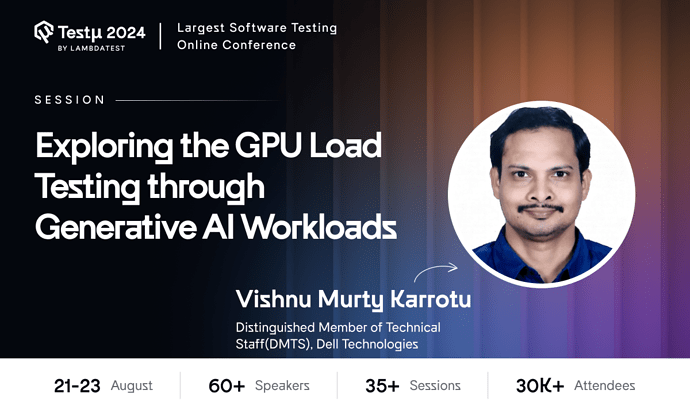

Join Vishnu, a Distinguished Member of the Technical Staff at Dell Technologies, as he unveils a groundbreaking methodology for GPU load testing. This talk combines generative AI workloads with Python-Docker orchestration and JMeter integration to create a comprehensive performance assessment framework.

Explore how Nvidia GPUs and the Stable Diffusion model are used to simulate real-world scenarios for precise GPU load testing. Learn about Docker’s role in managing workload generation, Python scripting for AI model deployment, and JMeter’s integration for holistic testing.

Still not registered? Don’t miss this chance—grab your free tickets now: Register Now!

Already registered? Post your questions in the thread below

Hi there,

If you couldn’t catch the session live, don’t worry! You can watch the recording here:

Additionally, we’ve got you covered with a detailed session blog:

Here are some of the Q&As from this session:

How does GPU load testing differ when applied to generative AI workloads compared to traditional workloads?

Vishnu Murthy Karrotu: Traditional workloads primarily focus on the CPU, while generative AI workloads are more GPU-intensive. This shift towards GPU usage is due to the nature of AI tasks, which require extensive parallel processing. To ensure optimal performance, we run these generative AI processes in our lab, thoroughly testing them on the system before releasing them into the market.

Beyond GPU utilization, what other performance metrics did you track, and how did you correlate them with the generative AI workload?

Vishnu Murthy Karrotu: To optimize GPU performance during AI workload load testing, it’s essential to monitor not just the GPU but the entire system. Collect data on CPU, memory, thermal performance, and power usage alongside GPU metrics. Pay special attention to cooling (e.g., fan speeds) and power management, as these directly impact GPU efficiency. Visualize this data through dashboards to track performance trends and ensure the GPU operates within optimal parameters, preventing bottlenecks and overheating.
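As a rough illustration of the GPU side of this monitoring (a sketch, not the tooling used in the session), the snippet below polls `nvidia-smi` for utilization, memory, temperature, and power draw. It assumes `nvidia-smi` is on the PATH; the helper names `parse_gpu_csv` and `sample_gpus` are made up for this example. CPU, system memory, and fan data would have to come from other tools.

```python
import subprocess

# Properties requested from nvidia-smi via --query-gpu.
FIELDS = ["utilization.gpu", "memory.used", "temperature.gpu", "power.draw"]


def parse_gpu_csv(text):
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    rows = []
    for line in text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        if len(values) != len(FIELDS):
            continue  # skip lines that do not match the requested fields
        row = {}
        for name, value in zip(FIELDS, values):
            try:
                row[name] = float(value)
            except ValueError:
                row[name] = None  # e.g. "[N/A]" when a GPU does not report a field
        rows.append(row)
    return rows


def sample_gpus():
    """Run nvidia-smi once and return the parsed readings (hypothetical helper)."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(FIELDS),
        "--format=csv,noheader,nounits",
    ]
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return parse_gpu_csv(out)
```

Calling `sample_gpus()` on a timer during the load test and writing the rows to a time-series store is one way to feed the dashboards mentioned above.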

Have you explored using other generative AI models (e.g., GANs, VAEs) for GPU load testing? If so, what were the key differences and challenges?

Vishnu Murthy Karrotu: Yes, we’ve started exploring various generative AI workloads, including GANs and VAEs, to see how they impact GPU load. However, we’re still in the process of evaluating which models and use cases are most relevant to our testing needs. One challenge is that not all workloads are equally representative of customer use cases, so we’re working closely with our marketing and quality teams to identify the most widely used and impactful scenarios.

Additionally, some models require significantly more intensive testing due to their smaller size or complexity, which adds to the challenge of ensuring comprehensive coverage before releasing to the market. This is an ongoing learning process as we continue to refine our approach.

Would you recommend using the same tech stack for Windows .dll methods as well? If so, how can we set that up?

Vishnu Murthy Karrotu: Yes, using the same tech stack for testing Windows .dll methods can be beneficial for consistency and ease of integration. To set this up, you can use frameworks that support .dll testing, such as NUnit or MSTest in the .NET environment. These frameworks allow you to write and execute tests specifically for Windows .dll methods, and they can be integrated into your existing testing pipeline alongside other technologies.

Which metric is more important?

Vishnu Murthy Karrotu: The most important metric often depends on the specific goals of your GPU load testing. However, performance metrics like latency, throughput, and resource utilization are generally critical. In the context of generative AI workloads, measuring the efficiency of GPU usage and the time taken to process tasks can be particularly valuable in assessing the overall system performance.

Here are some of the unanswered questions from the session:

What specific metrics and data should be collected during GPU load testing with generative AI to ensure comprehensive analysis?

Can you please shed some light on the AI features or AI-supporting tools for LoadRunner? Also, what AI can we use for performance testing in general?

How can GPU load testing be effectively integrated into a JaaS application to ensure that generative AI workloads are optimally balanced across available resources, while maintaining low latency and high throughput?

Hi! Why not run the LLM on the GPU directly instead of running a full server (backend, web API, etc.)?

Is generative AI effective for testing?

Which AI tool is the best fit for system testing?

What are the most demanding GenAI computation loads that can be tested - and why?

What characteristics should a GenAI workload have in order to be capable of load testing GPUs? The whole testing infrastructure should have high throughput to really load test the GPU. What are the most important KPIs to check during the test?

Which AI tools are a good fit for unit testing?

Thank you for your question regarding the metrics and data collection during GPU load testing with generative AI.

To ensure a comprehensive analysis, the following specific metrics should be collected:

- GPU Utilization: Monitor the percentage of GPU resources used during the load test to assess performance under varying workloads.
- Memory Usage: Track the GPU memory consumption to identify any bottlenecks or limitations when running generative AI models.
- Frame Rates (FPS): If applicable, measure the frames per second to evaluate the rendering performance of the generative models.
- Latency: Measure the time taken to generate outputs from the AI model, which helps in understanding responsiveness under load.
- Throughput: Assess the number of successful requests processed per unit time, which is crucial for understanding the system’s capacity.
- Error Rates: Monitor any errors or failures that occur during testing to identify potential issues with model performance or infrastructure.
- Temperature and Power Consumption: Collect data on GPU temperature and power usage to ensure the hardware operates within safe limits.
- Performance Metrics from JMeter: Integrate JMeter to gather detailed HTTP request/response metrics, including response time and success rate for API calls made to the AI model.

By collecting and analyzing these metrics, we can gain valuable insights into the performance and reliability of GPU systems under generative AI workloads.
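To make the latency, throughput, and error-rate items concrete, here is a minimal sketch that summarizes a JMeter results file. It assumes the results were saved as CSV with a header row containing `timeStamp` (epoch milliseconds), `elapsed` (response time in milliseconds), and `success` columns; `summarize_jtl` is a hypothetical helper name, and the throughput figure is only an approximation based on the span between the first and last recorded timestamps.

```python
import csv
import io
import math


def summarize_jtl(csv_text):
    """Summarize JMeter CSV results: latency percentiles, throughput, error rate."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return None
    elapsed = sorted(int(r["elapsed"]) for r in rows)   # response times in ms
    stamps = [int(r["timeStamp"]) for r in rows]        # sample timestamps, epoch ms
    failures = sum(1 for r in rows if r["success"].strip().lower() != "true")

    def percentile(p):
        # Nearest-rank percentile on the sorted response times.
        rank = max(1, math.ceil(p / 100 * len(elapsed)))
        return elapsed[rank - 1]

    # Approximate test duration as the span of recorded timestamps.
    span_s = (max(stamps) - min(stamps)) / 1000
    return {
        "requests": len(rows),
        "p50_ms": percentile(50),
        "p95_ms": percentile(95),
        "error_rate": failures / len(rows),
        "throughput_rps": len(rows) / span_s if span_s > 0 else None,
    }
```

Passing the contents of a results file to `summarize_jtl` gives numbers that can be lined up against GPU readings taken over the same time window.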

Thank you for your inquiry about AI features in LoadRunner and the broader applications of AI in performance testing.

AI Features and Tools for LoadRunner

- Smart Scripting: LoadRunner incorporates machine learning algorithms to simplify script creation. It can auto-generate scripts based on recorded actions, reducing manual effort.
- Performance Analytics: LoadRunner uses AI to analyze performance test results, identifying trends and anomalies that might not be immediately apparent. This helps in making data-driven decisions.
- Dynamic Load Generation: AI algorithms can optimize load generation based on historical data, simulating realistic user behaviors and adjusting load patterns dynamically during tests.
- Resource Utilization Insights: AI-driven analytics provide insights into system resource usage, helping teams pinpoint performance bottlenecks more efficiently.
- Predictive Analysis: LoadRunner can utilize AI to predict future performance issues based on current trends and historical data, allowing teams to proactively address potential problems.

General AI Applications in Performance Testing

- Automated Test Case Generation: AI can analyze application behavior and user interactions to generate test cases automatically, saving time and effort in the testing process.
- Anomaly Detection: Machine learning models can be trained to recognize normal performance patterns and flag deviations, helping to identify potential issues early.
- User Behavior Simulation: AI can model realistic user behavior patterns, allowing for more accurate load testing that reflects actual user interactions.
- Performance Forecasting: AI tools can analyze historical performance data to predict future performance trends, enabling better capacity planning.
- Continuous Performance Testing: AI can integrate with CI/CD pipelines to facilitate continuous performance testing, ensuring performance remains stable throughout the development lifecycle.

By leveraging these AI features and tools, organizations can enhance their performance testing efforts, leading to improved application reliability and user satisfaction.
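To give a feel for the anomaly detection idea, here is a deliberately simple statistical stand-in rather than a trained machine learning model: each measurement is compared against the mean and standard deviation of the preceding window, and large deviations are flagged. The function name, window size, and threshold are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value is more than `threshold` standard deviations
    away from the mean of the preceding `window` values."""
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Flat baseline: treat any change at all as a deviation.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

Applied to a series of response times or GPU temperatures, the returned indices point at samples worth a closer look. A real deployment would likely need a more robust baseline, since one spike inflates the window it later falls into.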

If you have any further questions or need additional details, feel free to ask!

Integrating GPU load testing into a JaaS (Java as a Service) application is essential for optimizing generative AI workloads. To ensure that resources are balanced effectively while maintaining low latency and high throughput, consider the following strategies:

1. Dynamic Resource Management
   - Auto-scaling Capabilities: Implement auto-scaling to adjust GPU resources automatically based on real-time workload requirements, ensuring efficient resource allocation.
2. Efficient Workload Distribution
   - Intelligent Task Scheduling: Utilize advanced scheduling algorithms to evenly distribute generative AI tasks across multiple GPUs, preventing bottlenecks and maximizing throughput.
3. Integration with Load Testing Tools
   - JMeter Utilization: Integrate JMeter to simulate user requests and workloads, allowing you to assess GPU performance under realistic scenarios while monitoring latency and throughput.
4. Real-time Performance Monitoring
   - Monitoring Solutions: Leverage tools like Prometheus or Grafana for real-time monitoring of GPU metrics, focusing on utilization, memory consumption, and response times.
5. Latency Reduction Techniques
   - Utilizing Edge Computing: Deploy generative AI workloads closer to users with edge computing to significantly reduce latency by minimizing data travel distances.
6. Continuous Improvement through Feedback
   - Data-Driven Optimization: Collect performance data from load tests and operational environments to refine workload distribution strategies, using machine learning for predictive analysis.
7. Realistic Workload Simulation
   - Diverse Testing Scenarios: Conduct GPU load tests simulating a variety of generative AI workloads to evaluate performance and identify potential bottlenecks.
8. CI/CD Pipeline Integration
   - Ongoing Performance Validation: Incorporate GPU load testing into your CI/CD processes to evaluate the impact of code changes on performance, automating tests for continuous optimization.

By implementing these strategies, you can ensure that your JaaS application effectively handles generative AI workloads while optimizing resource utilization, minimizing latency, and maximizing throughput.
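The workload distribution point can be sketched with a simple greedy scheduler: each task goes to whichever GPU currently has the least accumulated work. This is only one possible approach, and it assumes every task has a known cost estimate (for example, expected seconds of GPU time); `assign_tasks` is a hypothetical name.

```python
import heapq


def assign_tasks(task_costs, gpu_count):
    """Greedily assign each task to the GPU with the least accumulated cost."""
    # Heap entries are (accumulated_cost, gpu_index); the lightest GPU pops first.
    heap = [(0.0, gpu) for gpu in range(gpu_count)]
    heapq.heapify(heap)
    assignment = {gpu: [] for gpu in range(gpu_count)}
    # Placing the most expensive tasks first tends to give a more even split.
    order = sorted(range(len(task_costs)), key=lambda t: task_costs[t], reverse=True)
    for task in order:
        load, gpu = heapq.heappop(heap)
        assignment[gpu].append(task)
        heapq.heappush(heap, (load + task_costs[task], gpu))
    loads = {gpu: sum(task_costs[t] for t in tasks) for gpu, tasks in assignment.items()}
    return assignment, loads
```

For example, `assign_tasks([8, 4, 4, 2, 2], gpu_count=2)` splits the five tasks so that both GPUs end up with a total cost of 10. A production scheduler would also need to account for GPU memory limits and tasks whose cost is not known in advance.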

Running large language models (LLMs) directly on GPUs, as opposed to through a full server setup with a backend and web API, has distinct advantages and challenges that influence the choice of approach.

One significant advantage of direct GPU execution is performance. Leveraging GPU resources directly can lead to faster inference times, as there’s no overhead from additional layers like web servers or APIs. This setup often results in lower latency, making it particularly suitable for real-time applications where quick responses are critical. Additionally, for applications requiring intensive computation, running models directly on a GPU may be more cost-effective than maintaining a full server infrastructure, especially in short-term or experimental use cases.

However, there are notable challenges to consider. A full server setup allows for easier scalability, which is essential for handling multiple requests or users in production environments. Resource management becomes more complex when running directly on GPUs, as it may require manual handling of concurrent workloads. Moreover, a server backend facilitates smoother integration with other services, databases, and user interfaces, making it more practical for comprehensive applications.

Security is another important factor; running models directly exposes them to the client side, potentially introducing risks if sensitive data is involved. Furthermore, deploying and maintaining a direct GPU setup can be challenging in terms of updates, monitoring, and ensuring system uptime compared to a managed server environment.

In conclusion, the decision to run LLMs directly on GPUs or through a full server architecture depends largely on the specific use case, performance requirements, and infrastructure capabilities. Direct execution may be ideal for rapid prototyping or specific real-time applications, while a server-based approach is often more suitable for scalable, secure, and integrated solutions. If you have further questions or specific scenarios in mind, feel free to ask!
