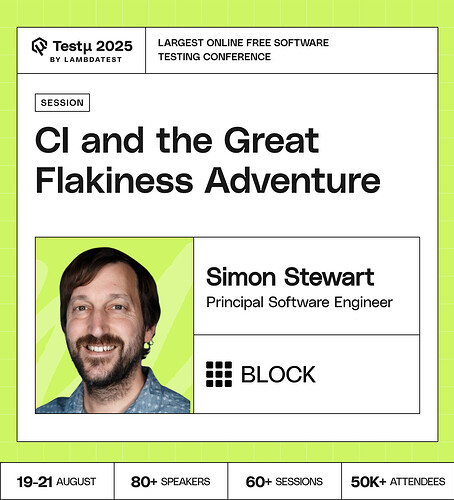

Join Simon Stewart, Software Engineer at Block and former Selenium project lead, as he dives into CI and the Great Flakiness Adventure. Explore how flaky tests undermine confidence in CI/CD pipelines, and learn practical techniques to reduce, manage, and in certain cases even embrace them.

From root-cause analysis to better build strategies, Simon shares insights gained from years of experience leading Selenium releases and tackling flakiness head-on.

Book your free spot today and strengthen your CI pipeline!


Here are some of the questions from the session:

Can we automatically fix failed tests, for example through AI-based self-healing?

What’s the most surprising root cause of flakiness you’ve ever discovered?

Is it a correct approach to set 1 or 2 retries when a test fails, so that the test is at least flagged as flaky rather than as a bug?

If I run a single test, say, 1000 times and it fails 67 times, would we call it a flaky test?

Can AI/ML realistically predict flakiness before a test is even committed?

What cultural or process shifts can teams adopt to treat flakiness as a priority, not a nuisance?

How can we define and quantify “flakiness” in a way that allows for objective measurement and comparison across various testing environments and software systems?

What are some of the lesser-known or often-overlooked factors that contribute to test flakiness, and how can tests and testing strategies be designed to address them effectively?

The AI Alchemist: We often give our teams powerful AI “hammers,” and suddenly every problem looks like a nail. How do we teach them the art of AI alchemy, knowing when to use an AI “scalpel” for precision, a “scaffold” for support, or a “cauldron” for

The path to AI fluency is often foggy with uncertainty and hype. What is the most important “lighthouse” (a core principle or a clear goal) you’ve built to help your team navigate through the fog without getting lost or discouraged?

How do we train our teams to be discerning art critics rather than passive consumers of AI content? What’s one technique you use to help them spot the “ghost in the machine”, the subtle biases, hallucinations, or logical flaws in AI output?

Many teams reach a plateau where they use AI for basic tasks but stop exploring its deeper potential. What “spark” or creative challenge can you introduce to push them beyond this plateau, from being mere AI users to becoming AI innovators?

AI excels at answering questions that already have answers. How do you coach your team to focus their human intellect on what AI can’t do: formulating the novel, strategic, “un-Googlable” questions that drive true business value?

Instead of framing AI around tasks it will replace, how can we reframe it as an amplifier for uniquely human skills? What’s one way you’re actively using AI to magnify your team’s empathy, strategic judgment, or creativity?

Can the root cause of a detected flaky test sometimes lie in the application itself, for example due to race conditions?

How can flaky tests impact build stability and developer confidence?

What are the most common causes of test flakiness in CI pipelines?

What metric or dashboard do you recommend for tracking flakiness over time, so that teams can prioritize fixing the most critical flaky tests first?
