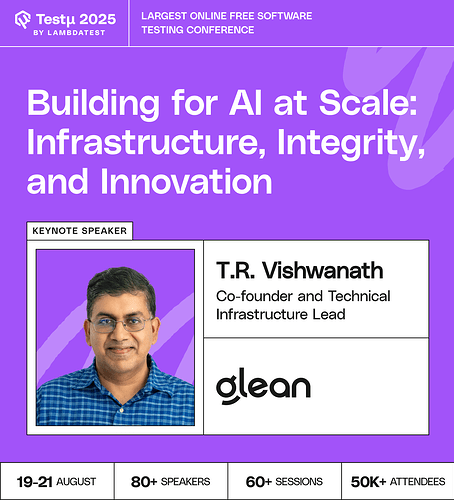

Join T.R. Vishwanath, Co-Founder of Glean, as he explores what it takes to build AI systems at scale with the right balance of infrastructure, integrity, and innovation.

Discover strategies for designing robust infrastructure, reliably validating AI behavior, and embedding trust, privacy, and safety into enterprise-ready AI.

Learn how to turn customer challenges into opportunities for product innovation without overfitting, while ensuring AI systems remain scalable, secure, and future-ready.

Don’t miss out, book your free spot now

As AI workloads become more complex, how can organizations ensure their infrastructure remains both scalable and cost-effective without compromising performance?

With the increasing integration of AI across various sectors, what best practices can be adopted to maintain data integrity and security throughout the AI lifecycle?

How would you architect a scalable approach to test and oversee AI systems whose outputs are non-deterministic?

How do you verify, at scale, the reliability and safety of AI that won’t produce the same answer twice?

When scaling AI from a pilot to an enterprise-wide system, what is the most common infrastructural bottleneck that teams fail to anticipate?

How do you detect and mitigate bias or unintended consequences in AI outputs at scale?

As the system scales, how do you manage the escalating costs of inference, and what are the key trade-offs between model performance and operational expense?

How do you build a system to effectively validate unpredictable AI behavior at scale? What does that testing and monitoring framework look like in practice?

What is the most critical “guardrail” or integrity check you’ve implemented at Glean to prevent a scaled AI system from producing harmful or untrustworthy results?

What is the best way to structure an engineering organization to balance rapid experimentation with the need for foundational integrity and reliability?

What are the key infrastructure bottlenecks organizations face when scaling AI initiatives?

Do you think innovation in AI hardware (chips, GPUs, TPUs) will outpace improvements in software optimization—or vice versa?

How do you ensure ethical guardrails scale along with infrastructure, so innovation doesn’t compromise trust?

How should organizations foster a culture of collaboration between AI experts, IT teams, and domain experts to drive innovation in large-scale AI projects?

Which cloud-native tools or architectures best support AI scalability?

How do you ensure AI decisions are explainable and auditable in enterprise systems?

How do innovations like MCP servers, GPUs, TPUs, and specialized accelerators reshape AI scaling strategies?

How can AI be used to strengthen infrastructure security, and what tools are recommended?

How do we balance the need for scalable infrastructure with the growing concerns around data privacy and integrity in AI systems?
