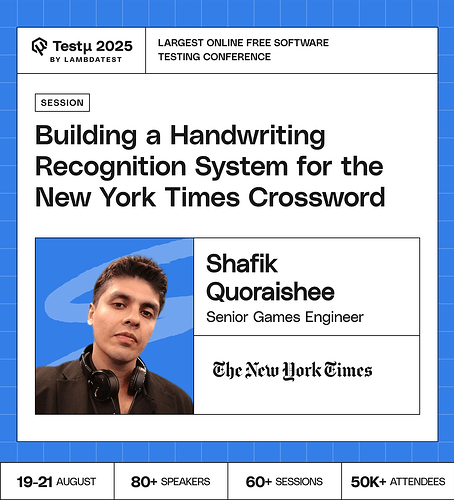

Join Shafik Quoraishee, Engineer at The New York Times, as he shares how the team built an experimental handwriting recognition system for the NYT Crossword app.

Discover how they turned crossword squares into interactive “SketchBoxes,” trained Deep-CNNs on diverse handwriting, and integrated TensorFlow Lite for on-device inference.

Gain insights on preprocessing handwriting, balancing responsiveness with accuracy, and how MLQA ensures vision models perform reliably in production.

Don’t miss out, save your spot now!

Can the handwriting recognition system apply to other word games or other game-like experiences using writing and drawing, and if so, how?

How do you address accessibility for people who have limited handwriting capability but want to engage with the NYT Crossword puzzle app?

What trade-offs do you see in deploying handwriting recognition on-device for real-time crossword solving versus relying on cloud inference?

How do you measure test quality without just counting test cases?

Are you tracking defect escape rate or just how fast bugs get fixed?

Is your AI helping you find what to test or just helping you test what you already knew?

How will the preprocessing steps (downscaling and binarizing) be optimized to stay efficient on a mobile device while preserving the quality of user-drawn characters for the deep convolutional neural network (Deep-CNN)?

How does this experimental handwriting recognition feature compare to or differentiate itself from other existing handwriting recognition systems or input methods available on mobile devices?

What are the potential future directions or expansions for this handwriting feature, such as exploring integration with other NYT Games or extending the functionality to include other input modalities?

Given the variable output of the ML model, what specific methodologies and metrics will the MLQA team use to ensure the accuracy and reliability of the handwriting recognition feature?

How can data augmentation techniques be used effectively to create diverse training samples that reflect real user handwriting variations, such as off-center or tilted characters?

How does the system handle variations in handwriting styles?

What was the toughest challenge in building handwriting recognition for something as tricky as crosswords?

What is the optimal approach for integrating contextual language models (to leverage clue/answer pairs) into the handwriting recognition process for crossword-specific lexicon adaptability?

How might transfer learning or fine-tuning be best leveraged to expand from digit recognition to full alphanumeric and punctuation recognition for crosswords?

We say the model is learning, but is it really learning?

Absolutely, handwriting recognition systems can extend far beyond traditional word games.

They can power a whole range of interactive, creative, and educational experiences that involve writing or drawing.

For example, imagine a drawing-based puzzle where players sketch clues that AI interprets, or a language-learning app that checks handwritten characters or vocabulary in real time.

Even games like Pictionary, math quizzes, or calligraphy-based challenges can use handwriting recognition to score accuracy, speed, or creativity.

That’s a thoughtful question, and accessibility should absolutely be part of any handwriting-driven experience.

For players with limited handwriting ability, the NYT Crossword puzzle app (or similar games) can offer alternative input modes that maintain the same engagement without relying solely on handwriting.

Options like on-screen keyboards, voice input, or even predictive text entry can make gameplay more inclusive.

AI can also assist by recognizing partial strokes or adapting to a player’s unique writing style over time.

In essence, accessibility isn’t about removing handwriting; it’s about adding flexibility so every player, regardless of physical ability, can enjoy the same challenge and satisfaction.

That’s a great question, and it’s one of the biggest architectural decisions in designing handwriting recognition systems.

Running recognition on-device gives you faster feedback, offline support, and stronger privacy since user data never leaves the device.

However, it can be limited by processing power, model size, and update flexibility.

In contrast, cloud inference allows for more powerful models, continuous updates, and better accuracy, but it introduces latency, requires internet access, and raises potential privacy concerns.

- On-device: low latency, works offline, and more private, but limited to less powerful models.

- Cloud-based: higher accuracy and easier updates, but slower and dependent on connectivity.

- A hybrid approach often works best: lightweight on-device inference for instant response, backed by periodic cloud validation for accuracy improvement.

So, the real goal is balance: keep gameplay smooth and responsive locally, while using the cloud to learn and improve over time.
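To make the hybrid idea concrete, here is a minimal Python sketch of one way it could be wired up. It is not the NYT implementation: `HybridRecognizer`, `confidence_threshold`, `pending_review`, and `flush_to_cloud` are hypothetical names, the 0.8 threshold is an arbitrary assumption, and both recognizers are stand-in callables rather than real TensorFlow Lite or cloud APIs. The on-device result is always returned immediately, and only low-confidence glyphs are queued for later cloud validation.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical type: a recognizer maps a flattened glyph image
# to a (label, confidence) pair. Any model could sit behind it.
Recognizer = Callable[[List[float]], Tuple[str, float]]


@dataclass
class HybridRecognizer:
    on_device: Recognizer
    confidence_threshold: float = 0.8  # assumed value, tune per model
    pending_review: List[List[float]] = field(default_factory=list)

    def recognize(self, glyph: List[float]) -> str:
        """Answer instantly from the local model; queue uncertain glyphs."""
        label, confidence = self.on_device(glyph)
        if confidence < self.confidence_threshold:
            # Low confidence: keep the glyph for periodic cloud validation.
            self.pending_review.append(glyph)
        return label

    def flush_to_cloud(self, cloud: Recognizer) -> List[Tuple[str, float]]:
        """Send queued glyphs to the cloud model, e.g. when back online."""
        results = [cloud(glyph) for glyph in self.pending_review]
        self.pending_review.clear()
        return results
```

In this sketch the player never waits on the network: `recognize` stays local, and `flush_to_cloud` can run in the background whenever connectivity and privacy settings allow it.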
