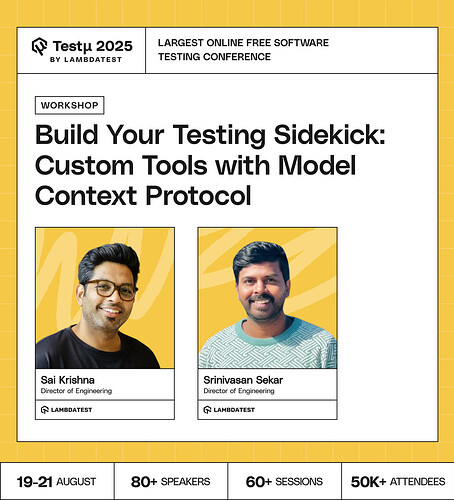

Join Sai Krishna and Srinivasan Sekar as they introduce the Model Context Protocol (MCP) and show you how to build your very own AI-powered testing sidekick.

Discover how MCP redefines test automation by enabling tools that understand your test contexts, generate intelligent scenarios, and integrate seamlessly with existing frameworks.

Learn practical strategies for creating custom MCP testing tools, integrating solutions like Appium Gestures and WebDriverAgent, and applying security best practices for production-ready deployments.

Don’t miss out, book your free spot now

What is a good rule of thumb for choosing between 1) using MCP and spending time refactoring the tests it produces, and 2) writing the tests yourself with the help of an AI agent? Or, put differently, how do we keep MCP from becoming counterproductive?

How seamlessly can MCP-based assistants integrate into CI/CD pipelines without adding bottlenecks?

Can AI truly understand user experience, or is it just simulating what it thinks we want?

Since MCP tools plug into frameworks and handle sensitive test data, what are the biggest risks of misuse or leakage, and how should QA teams balance experimentation with secure deployment?

How can testers practically use Model Context Protocol (MCP) to build lightweight AI sidekicks that adapt to their product context without requiring heavy ML expertise?

How will the testing sidekick use the MCP to dynamically understand the current state of the application under test (such as user flow, changed code modules, user persona, specific environment)?

How can we use Model Context Protocol custom tools to test an Electron app with Playwright?

Could an MCP server handle in-depth SDK testing, or testing of the code itself and of configurations such as UAT?

How will the testing sidekick ensure robust error handling and cope with situations where MCP servers are unavailable or return unexpected data?

How can MCP assist in auto-generating test cases from user stories or acceptance criteria?

How do we debug the AI’s decision-making process? If the AI generates an irrelevant test case or suggests a bad fix, what’s the workflow to troubleshoot and correct its behavior?

In what practical ways can testers apply Model Context Protocol (MCP) to build adaptive AI sidekicks tailored to their product environment without needing complex ML know-how?

What strategies can ensure that a custom testing tool integrated with MCP scales to larger applications and more complex test scenarios?

Is it better to keep a library of UI scenarios that auto-generate Playwright tests with MCP whenever needed, or just use AI once to build the framework and then maintain the tests ourselves?

How does MCP handle highly domain-specific failures in a complex business application versus common exceptions, and does the model require extensive training on our specific application’s codebase?

What’s the typical ratio of writing test-specific logic versus configuring the AI model? How much machine learning expertise does a test engineer really need to build and maintain these assistants effectively?

When using a tool like MCP Appium Gestures, how does the AI handle app-specific or custom UI elements? Does it rely on standard accessibility IDs, or can it visually interpret the screen to find components?

How can we stop an AI sidekick from being ‘too creative’ with test authoring while still leveraging MCP to generate novel edge cases humans might miss?

What’s the easiest way to start building a testing sidekick without getting lost in complex setup?