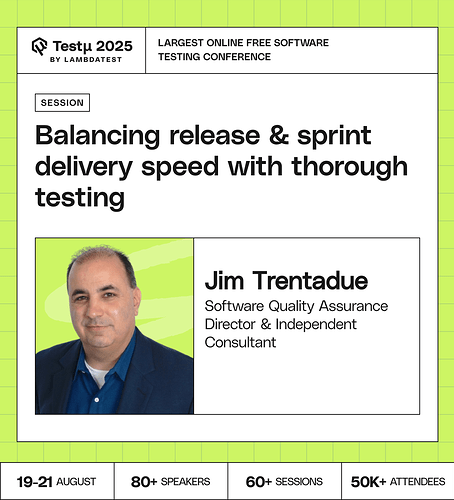

Join Jim Trentadue as he explores how testing teams can balance faster release and sprint delivery speeds with thorough testing without compromising quality.

Discover techniques to accelerate test case creation, speed up execution runs, and shorten feedback loops so teams can make faster, more informed decisions.

Learn practical approaches, including the role of AI, to boost efficiency in test design, execution, and validation, helping testers meet delivery commitments while maintaining confidence in product quality.

Don’t miss out. Book your free spot now.

What strategies do you use to maintain high-quality testing without slowing down sprint delivery?

In what ways can AI practically assist with test design, prioritization, and execution?

In a tight release crunch, which test category is the least risky to scale back: unit, integration, UI, or performance?

How do you decide which tests to prioritize when deadlines are tight but quality can’t be compromised?

How do you balance the need for rapid test creation for new features with the equally important but time-consuming task of maintaining and refactoring the existing automation suite?

If shipping on time required reducing test scope, which would be your first candidate for reduction: unit, integration, UI, or performance tests?

When management is pushing to “compress schedules,” what’s the most effective way to communicate the risks of sacrificing quality without sounding like a roadblock to progress?

When using AI to accelerate test case creation, what’s the most effective way to validate the AI’s output to ensure it’s not just generating plausible-sounding but ultimately low-value or redundant tests?

What are the biggest challenges QA/QE teams face when balancing the need for quick feature delivery with maintaining code quality and minimizing technical debt?

Are testers spending more time maintaining flaky tests than actually uncovering new bugs?

How can QA activities be shifted earlier in the development lifecycle to become a more proactive part of the quality process, reducing end-of-sprint bottlenecks?

How should the overall impact of randomized testing on software quality and development velocity be measured?

What impact does a “release-ready” mindset have on the way teams approach writing code and performing unit/integration tests?

How can dev and testing teams define “done” in a way that encompasses both speed and quality, so that increments are truly releasable at the end of each sprint?

What are the key metrics that teams should be tracking to understand the balance between delivery speed and quality?

If you had to cut one thing to meet a release deadline, what would you sacrifice first: Unit tests, Integration tests, UI tests, or Performance tests?

Do you believe “fast releases with some risk” are better than “slow but stable releases”?

What’s the biggest challenge in keeping testing thorough while meeting sprint and release deadlines?

How can testers ensure quality isn’t compromised when release velocity keeps increasing?
