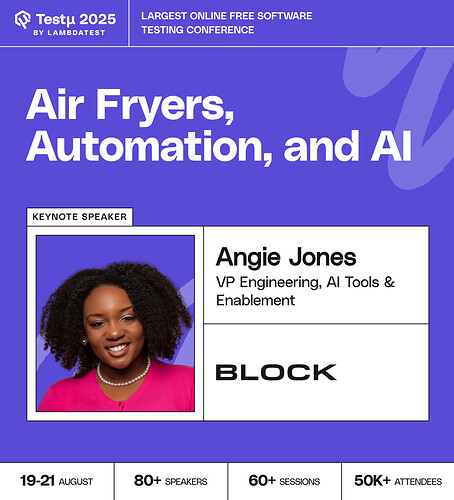

Angie Jones, VP Engineering, AI Tools & Enablement at Block, Inc., takes you on a journey through air fryers, automation, and AI, an unexpected trio with powerful lessons for testing. Learn why automation tools remain essential, even as AI agents unlock new possibilities for complex workflows.

You’ll get insights into AI Agents and MCP, see real demos of AI in test automation, and walk away with 10 actionable ideas you can apply immediately to level up your automation strategy.

Secure your free spot today and explore what’s next in automation! Register Now


As a QA fresher, should I focus on learning traditional automation testing first, or directly move towards AI-powered automation tools?

Without any automation in place right now, is Goose a good place to start? If yes, where is a good place to start learning it?

Which LLM is best for testing?

I hear this question often, and the answer is nuanced. As a QA fresher, you’ll get the most from building a strong foundation in traditional automation first.

Understanding frameworks like Selenium, API testing with Postman, or unit testing concepts gives you a clear sense of how tests are designed, maintained, and integrated into CI/CD pipelines.

Without this base, jumping straight into AI-powered tools can feel like using a black box: you might struggle to debug, optimize, or trust the outputs.

That said, it’s smart to stay aware of AI-driven tools and explore them gradually.

Practical approach:

- Start with core automation skills: scripting, test frameworks, CI/CD basics.

- Experiment with AI-assisted test generation or codeless tools to understand their advantages.

- Focus on problem-solving and analyzing failures; AI should augment your skills, not replace them.

- Look for hybrid projects where traditional and AI-driven approaches coexist.
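To make the “core automation skills” bullet concrete, here is a minimal unit test sketch using Python’s built-in `unittest` module. The `apply_discount` function is hypothetical and stands in for whatever code you actually test. What matters is the shape: one behavior per test, a clear expected value, and at least one negative case.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        # Typical input with a known expected result
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        # Boundary value: no discount leaves the price unchanged
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_out_of_range_percent(self):
        # Negative case: invalid input must fail loudly, not silently
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)
```

Saved in a file named like `test_discount.py`, it runs with `python -m unittest`, and that single command is the kind of step a CI/CD pipeline would execute on every commit.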

This strategy helps you build credibility as a QA professional while positioning yourself to use AI effectively.

If you’re starting from zero automation, Goose (the load-testing framework) can be a solid option, especially if your main need is load and performance testing.

It’s built in Rust, which makes it lightweight and very fast compared to older tools like JMeter.

That said, Goose shines when you’re already comfortable with programming, because its load tests are written as Rust code. If your team has no coding background, the learning curve may feel steep at first.

How to approach it:

- Begin by reviewing Goose’s official documentation and examples on GitHub.

- Start small: simulate a simple user journey like login and logout before scaling.

- Run locally first, then expand into distributed load tests.

- Compare Goose with alternatives like Locust to ensure it fits your context.
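Goose tests are written in Rust against Goose’s own API, which isn’t reproduced here. To show what “start small” looks like in practice, the sketch below swaps in plain Python standard-library code instead: a throwaway local HTTP server with hypothetical `/login` and `/logout` endpoints stands in for your application, and a handful of simulated users each run the login-then-logout journey concurrently while per-request latency is recorded. It is a toy load generator under those assumptions, not a replacement for Goose or Locust.

```python
from __future__ import annotations

import statistics
import threading
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class DemoAppHandler(BaseHTTPRequestHandler):
    """Hypothetical app under test: answers POST /login and POST /logout with 200."""

    def do_POST(self) -> None:
        if self.path in ("/login", "/logout"):
            self.send_response(200)
            self.send_header("Content-Length", "2")
            self.end_headers()
            self.wfile.write(b"ok")
        else:
            self.send_error(404)

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-request logging during the run


def start_demo_server() -> tuple[ThreadingHTTPServer, str]:
    """Start the stand-in app on a free local port; return the server and its URL."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), DemoAppHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_address[1]}"


def user_journey(base_url: str) -> list[float]:
    """One simulated user: log in, then log out, timing each request in seconds."""
    timings = []
    for step in ("/login", "/logout"):
        start = time.perf_counter()
        request = urllib.request.Request(base_url + step, data=b"", method="POST")
        # urlopen raises HTTPError on non-2xx responses, so a failed step ends the journey.
        with urllib.request.urlopen(request, timeout=5) as response:
            response.read()
        timings.append(time.perf_counter() - start)
    return timings


def run_load_test(base_url: str, users: int) -> list[float]:
    """Run `users` journeys concurrently and return every request latency."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        journeys = list(pool.map(user_journey, [base_url] * users))
    return [latency for journey in journeys for latency in journey]


if __name__ == "__main__":
    server, url = start_demo_server()
    try:
        latencies = run_load_test(url, users=10)
        print(f"{len(latencies)} requests, "
              f"median {statistics.median(latencies) * 1000:.1f} ms, "
              f"max {max(latencies) * 1000:.1f} ms")
    finally:
        server.shutdown()
        server.server_close()
```

Once a single journey like this behaves as expected locally, the same idea scales up: more users, a ramp-up period, and eventually distributed runs in a dedicated tool.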

So yes, Goose is a good start if performance is your immediate need, but pair it with a plan to build general automation skills in parallel.

That’s a hot topic right now. The truth is, there isn’t a single “best” LLM for testing; it depends on your use case and the maturity of your QA process.

Broadly, LLMs are helpful in three areas: generating test cases from requirements, analyzing logs or failures, and assisting with documentation or exploratory test ideas.
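For the first of those areas, much of the value comes from how the request is framed. The sketch below only builds a prompt string; it doesn’t call any model, and both the function name `build_test_case_prompt` and the JSON shape it asks for are assumptions chosen for illustration. Requesting a fixed structure makes the reply easier to parse and review.

```python
import json


def build_test_case_prompt(requirement: str, max_cases: int = 5) -> str:
    """Hypothetical helper: ask an LLM to turn one requirement into test cases.

    The reply format (a JSON array of objects) is an assumption, chosen so
    the output can be checked by code before a human reviews it.
    """
    if max_cases < 1:
        raise ValueError("max_cases must be at least 1")
    example_case = {"id": "TC-1", "title": "...", "steps": ["..."], "expected": "..."}
    return (
        "You are helping a QA engineer design test cases.\n"
        f"Requirement:\n{requirement.strip()}\n\n"
        f"Write at most {max_cases} test cases covering the happy path, "
        "edge cases, and negative cases.\n"
        "Reply with a JSON array only. Each element must look like:\n"
        f"{json.dumps(example_case)}"
    )
```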

Here’s how I’d frame it:

- General-purpose LLMs (like GPT-4, GPT-5, Claude, or Gemini) are great for brainstorming scenarios, creating test data, or translating requirements into test steps.

- Domain-specific or fine-tuned LLMs (for example, those trained on your product or codebase) can be noticeably more accurate for your domain, but they require setup and training data.

- Open-source models (like Llama or Mistral) are valuable if data privacy is critical, but they demand infrastructure and tuning effort.

Common mistake: teams expect an LLM to generate “production-ready” tests instantly. In practice, you still need human review to refine cases, validate coverage, and integrate them into automation frameworks.
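Part of that review can be automated before a person looks at the output. The sketch below assumes the model was asked to reply with a JSON array of objects holding `id`, `title`, `steps`, and `expected` (a hypothetical format, not any tool’s standard), and it separates well-formed, non-duplicate cases from malformed ones. Passing these checks says nothing about whether a case is correct or whether coverage is adequate; that judgment stays with the reviewer.

```python
from __future__ import annotations

import json
from typing import Any

# Hypothetical schema the model was asked to follow
REQUIRED_FIELDS = ("id", "title", "steps", "expected")


def triage_generated_cases(raw_reply: str) -> tuple[list[dict[str, Any]], list[str]]:
    """Split an LLM reply into cases ready for human review and a list of problems."""
    try:
        cases = json.loads(raw_reply)
    except json.JSONDecodeError as exc:
        return [], [f"reply is not valid JSON: {exc.msg}"]
    if not isinstance(cases, list):
        return [], ["reply is not a JSON array"]

    accepted: list[dict[str, Any]] = []
    problems: list[str] = []
    seen_titles: set[str] = set()
    for index, case in enumerate(cases):
        if not isinstance(case, dict):
            problems.append(f"item {index}: not an object")
            continue
        # Empty values (e.g. an empty steps list) count as missing
        missing = [field for field in REQUIRED_FIELDS if not case.get(field)]
        if missing:
            problems.append(f"item {index}: missing {', '.join(missing)}")
            continue
        if not isinstance(case["steps"], list):
            problems.append(f"item {index}: steps is not a list")
            continue
        # Case-insensitive title match catches near-verbatim duplicates
        key = str(case["title"]).strip().lower()
        if key in seen_titles:
            problems.append(f"item {index}: duplicate title {case['title']!r}")
            continue
        seen_titles.add(key)
        accepted.append(case)
    return accepted, problems
```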

If you’re just getting started, I’d recommend experimenting with a general-purpose LLM for lightweight tasks, then moving toward fine-tuned or open-source models when your org is ready for deeper integration.
