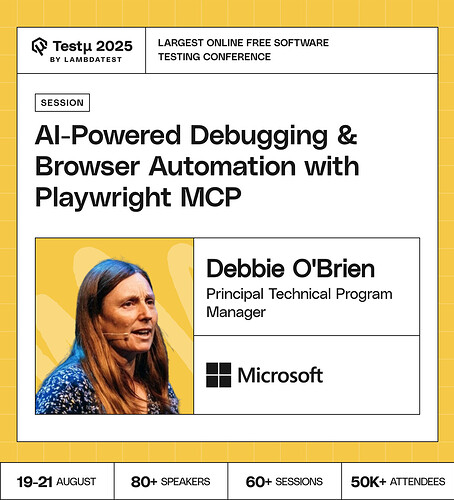

Join Debbie O’Brien, Senior Technical Program Manager at Microsoft, as she demonstrates how AI is transforming Playwright with Playwright MCP, making debugging smarter, test creation faster, and browser automation more adaptive.

Discover how AI-powered debugging identifies flaky tests, accelerates test generation, and makes browser interactions resilient to UI changes, helping you work faster and smarter.

Don’t miss out, book your free spot now

What level of expertise (or upskilling) is required for QA and development teams to effectively use Playwright MCP’s AI capabilities?

As Playwright MCP makes browser interactions more adaptive, how can QA validate that this adaptability doesn’t mask real issues introduced by UI changes?

What kind of bugs can AI-powered debugging catch better than humans with Playwright?

In what specific scenarios does Playwright MCP’s AI excel at uncovering edge cases or anomalies that traditional, static test automation might miss, and how does it achieve this?

How do you control input tokens when you include this MCP in a custom agent? All the tools go into the API calls as input tokens, increasing usage to 50-60k tokens per API call. Given this, do you see it being used when we have to run thousands of tests daily?

Does Playwright support mobile-native application testing?

Does Playwright MCP support self-healing locators?

In multi-browser testing with Playwright MCP, different browsers often behave differently. How can AI-powered debugging help identify whether an issue is browser-specific or a true application bug?

How do you write great prompts for testing with AI, and how can you show the probability of getting the most out of them?

How are instructions different from prompts?

Can you share the guidelines in chat? Also, is there a way to auto-discover object locators to enable going from user stories to automation?

Is it better to keep a library of UI scenarios that auto-generate Playwright tests with MCP whenever needed, or just use AI once to build the framework and then maintain the tests ourselves?

Does the AI ever produce passing tests that aren’t actually passing (false positives)?

How does Playwright MCP use AI to boost the debugging process for browser automation scripts, particularly in identifying and resolving flaky tests or unpredictable UI element behavior?

Can you suggest ways to debug issues stemming from race conditions when tests run in parallel?

Also, can you address what to do when we run out of our monthly allotment of Claude tokens? We have been trying to find where to add more.

Are agent modes like the generator exclusive to VS Code, or will VS Code forks also show and use them?

How does MCP handle UI changes that are non-deterministic or context-specific, like dynamic content or randomized layouts?
