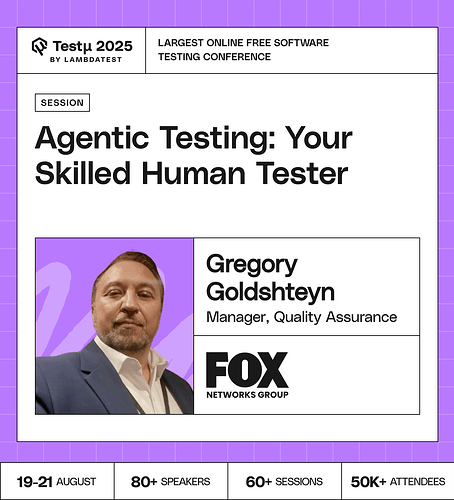

Join Gregory as he introduces the future of automation with agentic testing, your intelligent teammate that thinks and adapts like a skilled human tester.

Discover how agentic workflows act, observe, reason, and learn to deliver smarter, more reliable test automation that integrates seamlessly into your development lifecycle.

Learn how this new approach goes beyond traditional automation, empowering QA teams with adaptive testing that improves efficiency, collaboration, and continuous quality.

Don’t miss out: book your free spot now.

How do you see the role of human testers evolving as agentic AI takes on more complex testing tasks?

How should senior engineers decide when to dive into AI implementation details versus when to zoom out and focus on broader design principles?

How will human testers’ roles change with agentic AI?

How do senior engineers ensure they remain technically grounded in AI while still communicating design trade-offs and architecture clearly to non-experts?

How does an agentic testing system handle ambiguous requirements or user stories? Can you provide an example of how it “reasons” through ambiguity to create a valid test plan?

How does the introduction of an “intelligent teammate” change the day-to-day role and skillset required of a human QA engineer?

What does the initial “training” or “onboarding” process look like for a new agentic testing system on a complex, existing application?

How critical is it for senior engineers leading AI initiatives to have hands-on experience with model development versus focusing purely on architecture and strategy?

In what ways can agentic AI be sufficiently humanized that it can be called a “skilled human tester”?

What role might humans play, in terms of intuition or intelligence, when agentic AI itself acts as the skilled tester?

Are there bugs only humans can catch?

What are the key ethical considerations and risks associated with giving AI agents more autonomy in testing, and how can human testers ensure responsible and unbiased testing practices?

What skillset does agentic testing require, in contrast to traditional software testing?

How should senior engineers balance deep technical knowledge in AI with the ability to abstract and communicate high-level system design?

What’s harder for AI agents: exploring edge cases or understanding user emotions?

How can senior engineers foster a culture of experimentation and innovation while maintaining production reliability in AI systems?

Testing with AI agents feels like: playing chess, solving Sudoku, or debugging spaghetti code?

What types of bugs are humans still better at catching than AI?

Can agentic testing reduce tester burnout or will it add complexity?
