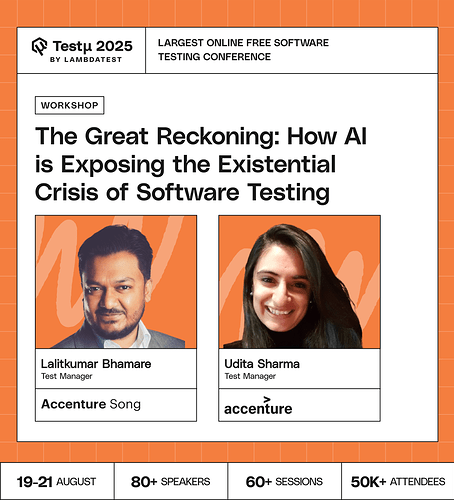

Join Lalitkumar Bhamare, Productivity Engineering and Quality Coach at Accenture Song, and Udita Sharma, Test Manager at Accenture, as they explore how AI is reshaping the software testing profession.

Learn why the future favors testers who can adapt intellectually, turn information into insight, and elevate testing into a strategic intelligence function.

Discover how to transition from producing test cases to generating actionable business insights and develop cognitive architecture skills that drive smarter decisions.

Don’t miss out. Book your free spot now.

AI can already generate scripts and find bugs. What does it actually mean to be ‘skilled’ in testing today?

If AI can test better and faster, what is the true value of human testers now?

With AI highlighting the limitations of traditional testing metrics that focus on creating an illusion of control rather than actual effectiveness, how should the definition of “software quality” evolve?

How do you picture the QA engineer in the loop in 2026? Which skills will be the highest priority, and which will no longer be necessary? How do we stay relevant to businesses?

How has AI changed how you prioritize which tests to run? Do you spend more time on edge cases now?

Beyond automating existing testing processes, how can AI be used to fundamentally reimagine the goals and strategies of software testing, exploring new frontiers and types of analysis that were previously very challenging?

If AI is exposing that testing was never a well-defined discipline, how should we now redefine what it truly means to be ‘skilled’ in testing?

Have you ever had to flag an AI-generated test result that felt wrong even if the numbers looked good? What was that conversation like with stakeholders?

Does relying too much on AI in testing risk creating a “false sense of quality,” where software passes tests but fails real-world expectations?

With AI in the mix, how do you now prioritize tests, and are edge cases receiving greater attention?

How has AI impacted your test selection process? Do you find yourself investing more effort in edge scenarios?

With AI integrating with CI/CD pipelines, how might the traditional role of a tester as a gatekeeper of quality change?

How do we validate and trust AI-generated test cases when the AI itself may introduce hidden biases or blind spots?

Where should we draw the line between what AI can test effectively and what still requires human intuition?

If AI can write and test code, what happens to the traditional role of a tester? Do we evolve or dissolve?

As AI takes over more parts of the testing lifecycle, should we start treating test engineers as prompt engineers?

Is AI accelerating the obsolescence of traditional QA roles, or simply reshaping them?

Imagine knowing which tests will fail before we even run them. If we’re tired of reactive testing and endless script maintenance, shouldn’t we be exploring predictive AI tools and how we can apply them in our projects today?

How can we be assured that involving AI in our daily work doesn’t compromise our data? Since we know AI tools store data and learn from it, how can we keep that data secure?
