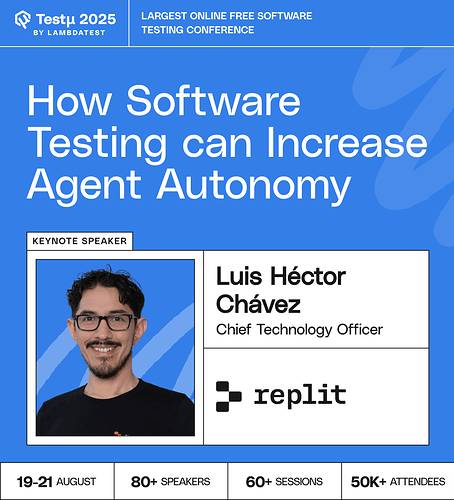

Join Luis Héctor Chávez, CTO at Replit, as he explores how software testing must evolve to keep up with AI agents that write code.

Learn why tests are more critical than ever, how multi-modal testing addresses agent errors, and how best practices in software engineering guide AI-driven development.

Discover practical approaches to increasing agent autonomy while maintaining code reliability and quality in AI-powered workflows.

Don’t miss out: book your free spot now.

What role can testers play in making AI agents more autonomous without losing control or safety?

As coding shifts more toward AI-driven development, what is the single most important capability testers need to build for the future?

When migrating tests to BiDi-based frameworks, what are the most common pitfalls?

What key skill will keep testers valuable as AI takes on more of the coding workload?

How can software testing frameworks be designed to safely increase autonomous decision-making in agents, while ensuring reliability and accountability?

How can software testing frameworks be designed to effectively identify and validate the decision-making capabilities of autonomous agents in various scenarios?

What metrics can be used to objectively measure the level of autonomy an agent possesses, and how can software testing be structured to track and improve these metrics over time?

Are there established KPIs or metrics to measure an AI agent’s autonomy, and how can testing validate improvements in these metrics?

With AI as the future, will the engineer’s role shift more toward that of a strategic engineer?

How can the integration of AI agents within the testing process facilitate a “shift-left” approach?

What strategies within software testing can be used to validate the ethical decision-making capabilities of autonomous agents in situations involving trade-offs or conflicting goals?

How can test data generation and augmentation techniques be optimized to create challenging and varying scenarios that push the limits of an agent’s autonomous reasoning/adaptation abilities?

As AI begins to write more code, what’s the number one skill testers should learn now to stay valuable and effective in the future?

As agents become more autonomous, how can testing frameworks ensure that autonomy does not compromise safety, reliability, or ethical considerations?

What role does test architecture play in validating autonomous behaviors in agents?

How does testing help agents make smarter choices without constant human oversight?

How can shift-left testing principles be applied in AI agent development to proactively increase agent autonomy from early stages?

How can AI-powered test case generation and exploration empower agents to proactively discover and address edge cases, ideally leading to greater autonomous problem-solving abilities?
